- Overview

- Definitions

- Requirements

- Dependencies

- Design Highlights

- IO bulk client Func Spec

- Logical Specification

- Conformance

- Unit Tests

- System Tests

- Analysis

- References

Overview

This document describes the client side of IO bulk transfer. This functionality is used only for the IO path. The IO bulk client constitutes the client side of bulk IO carried out between the Motr client file system and the data server (ioservice, also known as the bulk IO server). The Motr network layer incorporates a bulk transport mechanism to transfer user buffers in zero-copy fashion. The generic io fop contains a network buffer descriptor which refers to a network buffer. The bulk client creates io fops, attaches kernel pages (or a vector, in user mode) to the net buffer associated with each io fop, and submits the fop to the rpc layer. The rpc layer populates the net buffer descriptor from the io fop and sends the fop over the wire. The receiver starts the zero-copy of buffers using the net buffer descriptor from the io fop.

Definitions

- m0t1fs - Motr client file system. It works as a kernel module.

- Bulk transport - Event based, asynchronous message passing functionality of Motr network layer.

- io fop - A generic io fop that is used for read and write.

- rpc bulk - An interface to abstract the usage of network buffers by client and server programs.

- ioservice - A service providing io routines in Motr. It runs only on server side.

Requirements

- R.bulkclient.rpcbulk The bulk client should use rpc bulk abstraction while enqueueing buffers for bulk transfer.

- R.bulkclient.fopcreation The bulk client should create io fops as needed if pages overrun the existing rpc bulk structure.

- R.bulkclient.netbufdesc The generic io fop should contain a network buffer descriptor which points to an in-memory network buffer.

- R.bulkclient.iocoalescing The IO coalescing code should conform to the new format of the io fop. This is a side effect rather than a core part of the functionality: since the format of the io fop changes, the IO coalescing code that depends on it needs to be restructured.
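
The fop creation requirement above can be illustrated with a minimal sketch. It is not Motr code: `sketch_bulk`, `sketch_bulk_page_add()`, `sketch_enqueue_pages()` and the capacity `SKETCH_BULK_MAX_PAGES` are hypothetical names and values chosen for illustration. It assumes that adding a page to a full rpc bulk structure fails, and that the client reacts by opening a new io fop.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical capacity of one rpc bulk structure (assumption). */
enum { SKETCH_BULK_MAX_PAGES = 4 };

/* Stand-in for the rpc bulk structure embedded in an io fop. */
struct sketch_bulk {
    size_t sb_nr_pages;
};

/* Adds one page; fails when the structure is already full. */
static int sketch_bulk_page_add(struct sketch_bulk *bulk)
{
    if (bulk->sb_nr_pages == SKETCH_BULK_MAX_PAGES)
        return -EMSGSIZE;
    bulk->sb_nr_pages++;
    return 0;
}

/*
 * Enqueues nr_pages pages, opening a new io fop whenever the current
 * rpc bulk structure overruns. Returns the number of io fops created.
 */
static size_t sketch_enqueue_pages(size_t nr_pages)
{
    struct sketch_bulk cur = { 0 };
    size_t nr_fops = 0;
    size_t i;
    int rc;

    for (i = 0; i < nr_pages; i++) {
        if (nr_fops == 0 || sketch_bulk_page_add(&cur) != 0) {
            /* No fop yet, or the current one is full: start a new one. */
            cur.sb_nr_pages = 0;
            nr_fops++;
            rc = sketch_bulk_page_add(&cur);
            assert(rc == 0);
            (void)rc;
        }
    }
    return nr_fops;
}
```

With the assumed capacity of 4 pages, 9 pages are spread over three fops holding 4, 4 and 1 pages.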

Dependencies

- r.misc.net_rpc_convert The bulk client needs the Motr client file system to use the new network layer APIs, which include m0_net_domain and m0_net_buffer.

- r.fop.referring_another_fop With the introduction of a net buffer descriptor in the io fop, a mechanism is needed so that fop definitions from one component can refer to definitions from another component. m0_net_buf_desc is a fop that serves as the on-wire representation of a m0_net_buffer.

- See also

- m0_net_buf_desc.

Design Highlights

The IO bulk client uses a generic in-memory structure representing an io fop and its associated network buffer. This in-memory io fop contains another abstract structure that represents the network buffer associated with the fop. The bulk client creates m0_io_fop structures as necessary, attaches kernel pages or a user space vector to the associated m0_rpc_bulk structure, and submits the fop to the rpc layer. The rpc layer populates the network buffer descriptor embedded in the io fop and sends the fop over the wire. The associated network buffer is added to the appropriate buffer queue of the transfer machine owned by the rpc layer. Once the receiver side receives the io fop, it acquires a local network buffer and calls the m0_rpc_bulk APIs to start the zero-copy. In short, the io fop carries the net buffer descriptor, and the bulk server asks the transfer machine belonging to the rpc layer to start the zero-copy of data buffers.
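
The descriptor hand-off described above can be modelled with a toy, single-process sketch. All names here (`sketch_tm`, `sketch_bulk_store()`, `sketch_bulk_load()` and so on) are hypothetical stand-ins and do not reproduce the real m0_rpc_bulk signatures; the descriptor is assumed to be a plain queue index, and `memcpy()` stands in for the transfer that the network transport would perform.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

enum { SKETCH_MAX_BUFS = 8 };

/* Stand-in for a network buffer holding user data. */
struct sketch_net_buf {
    const char *nb_data;
    size_t      nb_len;
};

/* Stand-in for the net buffer descriptor carried inside the io fop. */
struct sketch_buf_desc {
    int bd_id;
};

struct sketch_io_fop {
    struct sketch_buf_desc if_desc;
};

/* Stand-in for the transfer machine and its buffer queue. */
struct sketch_tm {
    struct sketch_net_buf tm_queue[SKETCH_MAX_BUFS];
    int                   tm_nr;
};

/* Sender side: queue the buffer and record its descriptor in the fop. */
static int sketch_bulk_store(struct sketch_tm *tm,
                             const struct sketch_net_buf *nb,
                             struct sketch_io_fop *fop)
{
    if (tm->tm_nr == SKETCH_MAX_BUFS)
        return -1;
    tm->tm_queue[tm->tm_nr] = *nb;
    fop->if_desc.bd_id = tm->tm_nr++;
    return 0;
}

/* Receiver side: resolve the descriptor and pull data into a local buffer. */
static int sketch_bulk_load(const struct sketch_tm *sender_tm,
                            const struct sketch_io_fop *fop,
                            char *dst, size_t dst_len)
{
    int id = fop->if_desc.bd_id;

    if (id < 0 || id >= sender_tm->tm_nr)
        return -1;
    if (sender_tm->tm_queue[id].nb_len > dst_len)
        return -1;
    memcpy(dst, sender_tm->tm_queue[id].nb_data,
           sender_tm->tm_queue[id].nb_len);
    return 0;
}
```

The point of the sketch is that only the small descriptor travels inside the fop; the data itself is fetched separately by the receiver once it has a local buffer ready.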

Logical Specification

- Component Overview

- Subcomponent design

- State Specification

- Threading and Concurrency Model

- NUMA optimizations

Component Overview

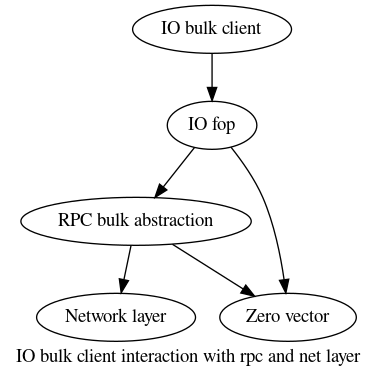

The following @dot diagram shows the interaction of bulk client program with rpc layer and net layer.

Subcomponent design

The ioservice subsystem primarily comprises two subcomponents:

- IO client (includes the IO coalescing code)

- IO server (server part of io routines)

In the IO client subsystem, IO requests belonging to the same fid and intent (read/write) are clubbed together into one fop, and this resultant fop is sent instead of the member io fops.
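
The grouping rule above can be sketched as follows. The types and functions (`sketch_fid`, `sketch_io_req`, `sketch_can_coalesce()`, `sketch_coalesced_count()`) are hypothetical and only illustrate the "same fid and same intent" criterion; the quadratic scan is chosen for brevity and is not a claim about how the actual coalescing code walks its requests.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

enum sketch_intent { SKETCH_READ, SKETCH_WRITE };

/* Hypothetical file identifier. */
struct sketch_fid {
    uint64_t f_container;
    uint64_t f_key;
};

/* Hypothetical IO request: target file plus read/write intent. */
struct sketch_io_req {
    struct sketch_fid  ir_fid;
    enum sketch_intent ir_intent;
};

/* Two requests may share a fop only if fid and intent both match. */
static bool sketch_can_coalesce(const struct sketch_io_req *a,
                                const struct sketch_io_req *b)
{
    return a->ir_fid.f_container == b->ir_fid.f_container &&
           a->ir_fid.f_key == b->ir_fid.f_key &&
           a->ir_intent == b->ir_intent;
}

/* Number of fops sent after coalescing: one per distinct (fid, intent). */
static size_t sketch_coalesced_count(const struct sketch_io_req *reqs,
                                     size_t nr)
{
    size_t count = 0;
    size_t i;
    size_t j;

    for (i = 0; i < nr; i++) {
        bool seen = false;

        for (j = 0; j < i; j++)
            if (sketch_can_coalesce(&reqs[i], &reqs[j]))
                seen = true;
        if (!seen)
            count++;
    }
    return count;
}
```

For example, two reads and one write on the same fid, plus one read on a different fid, would result in three fops on the wire under this rule.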

Subcomponent Data Structures

The IO coalescing subsystem of ioservice primarily works on IO segments. An IO segment is an in-memory structure that represents a contiguous chunk of IO data along with its extent information. An internal data structure, ioseg, represents the IO segment.

- ioseg An in-memory structure used to represent a segment of IO data.

Subcomponent Subroutines

- ioseg_get() - Retrieves an ioseg given its index in zero vector.
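
As an illustration of the lookup described above, here is a minimal sketch. The layouts of `sketch_ioseg` and `sketch_zerovec` and the signature of `sketch_ioseg_get()` are assumptions made for this example; they are not the actual definitions of ioseg, the zero vector or ioseg_get().

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical IO segment: extent information plus its data buffer. */
struct sketch_ioseg {
    uint64_t is_index;  /* starting offset of the segment */
    uint64_t is_size;   /* length of the segment in bytes */
    void    *is_buf;    /* data buffer backing the segment */
};

/* Hypothetical zero vector: parallel arrays of offsets, sizes, buffers. */
struct sketch_zerovec {
    uint32_t  zv_nr;
    uint64_t *zv_index;
    uint64_t *zv_count;
    void    **zv_buf;
};

/* Fills *out with the segment at position idx of the zero vector. */
static int sketch_ioseg_get(const struct sketch_zerovec *zv, uint32_t idx,
                            struct sketch_ioseg *out)
{
    if (idx >= zv->zv_nr)
        return -EINVAL;
    out->is_index = zv->zv_index[idx];
    out->is_size  = zv->zv_count[idx];
    out->is_buf   = zv->zv_buf[idx];
    return 0;
}
```

Returning an error code for an out-of-range index is a design choice of this sketch; an implementation could equally treat it as a precondition violation.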

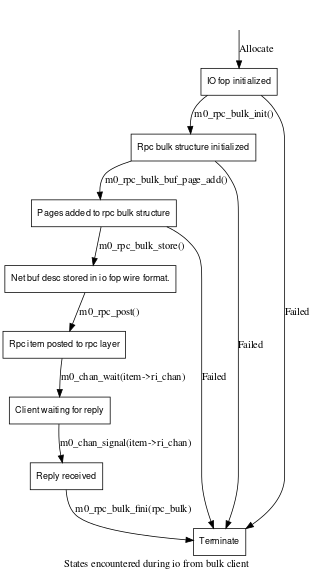

State Specification

Threading and Concurrency Model

No explicit locking is needed for structures like m0_io_fop and ioseg, since they are protected by locking at upper layers, such as the locking done in m0t1fs when dispatching IO requests.

NUMA optimizations

Performance does not need to be optimized by binding the incoming thread to a particular processor. However, this can be done, in keeping with the design of the request handler, which tries to preserve the locality of threads executing in a particular context by establishing affinity to a designated processor. Such affinity is still established at a level higher than io fop processing.

Conformance

- I.bulkclient.rpcbulk The bulk client uses rpc bulk APIs to enqueue kernel pages to the network buffer.

- I.bulkclient.fopcreation The bulk client creates new io fops until all kernel pages are enqueued.

- I.bulkclient.netbufdesc The on-wire definition of io_fop contains a net buffer descriptor.

- See also

- m0_net_buf_desc

- I.bulkclient.iocoalescing Since all IO coalescing code is built around the definition of the io fop, it will conform to the new format of the io fop.

Unit Tests

All external interfaces based on m0_io_fop and m0_rpc_bulk will be unit tested. All unit tests will stress success and failure conditions. Boundary condition testing is also included.

- The m0_io_fop* and m0_rpc_bulk* interfaces will be unit tested first, in the following order:

- m0_io_fop_init Check if the inline m0_fop and m0_rpc_bulk are initialized properly.

- Call m0_rpc_bulk_page_add/m0_rpc_bulk_buffer_add to add pages/buffers to the rpc_bulk structure and cross-check that they are actually added.

- Add more pages/buffers than the rpc_bulk structure can hold and check that the proper error code is returned.

- Call m0_io_fop_fini to check that an initialized m0_io_fop and the inline m0_rpc_bulk are properly finalized.

- Initialize and start a network transport and a transfer machine. Invoke m0_rpc_bulk_store on the rpc_bulk structure and cross-check that the net buffer descriptor is properly populated in the io fop.

- Tweak the parameters of the transfer machine so that it goes into a degraded/failed state, invoke m0_rpc_bulk_store, and check that it returns the proper error code.

- Start another transfer machine and invoke m0_rpc_bulk_load to check that it recognizes the net buffer descriptor and starts the buffer transfer properly.

- Tweak the parameters of the second transfer machine so that it goes into a degraded/failed state, invoke m0_rpc_bulk_load, and check that it returns the proper error code.

System Tests

Not applicable.

Analysis

- m denotes the number of IO fops with same fid and intent (read/write).

- n denotes the total number of IO segments in m IO fops.

- Memory consumption: O(n). During IO coalescing, n IO segments are allocated and subsequently deallocated once the resulting IO fop is created.

- Processor cycles: O(n). During IO coalescing, all n segments are traversed and the resultant IO fop is created.

- Locks: Minimal, since locking is mostly taken care of by upper layers.

- Messages: Not applicable.
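
The linear bounds above can be illustrated with a single-pass sketch over n segments. It assumes, purely for illustration, that the segments gathered from the m fops are already sorted by starting offset and that contiguous extents are merged into one; `sketch_ext` and `sketch_segs_merge()` are hypothetical names, not part of the coalescing code.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical extent of one IO segment. */
struct sketch_ext {
    uint64_t e_start;
    uint64_t e_len;
};

/*
 * Merges contiguous extents from in[0..n) into out[], which must have
 * room for n entries. Input is assumed sorted by e_start. Each input
 * segment is visited exactly once, so the pass takes O(n) time and
 * uses O(n) memory for the output array.
 */
static size_t sketch_segs_merge(const struct sketch_ext *in, size_t n,
                                struct sketch_ext *out)
{
    size_t nr_out = 0;
    size_t i;

    for (i = 0; i < n; i++) {
        if (nr_out > 0 &&
            out[nr_out - 1].e_start + out[nr_out - 1].e_len ==
            in[i].e_start)
            /* Contiguous with the previous extent: extend it. */
            out[nr_out - 1].e_len += in[i].e_len;
        else
            out[nr_out++] = in[i];
    }
    return nr_out;
}
```

Three input segments at offsets 0, 4096 and 16384, each 4096 bytes long, collapse into two extents: one of 8192 bytes at offset 0 and one of 4096 bytes at offset 16384.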

References

For documentation links, please refer to this file: doc/motr-design-doc-list.rst

- RPC Bulk Transfer Task Plan

- Detailed level design HOWTO, an older document on which this style guide is partially based.