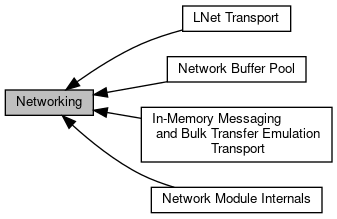

The networking module provides an asynchronous, event-based message passing service, with support for asynchronous bulk data transfer (if used with an RDMA capable transport).


Modules | |

| In-Memory Messaging and Bulk Transfer Emulation Transport | |

| LNet Transport | |

| Network Buffer Pool | |

| The network buffer pool allocates and manages a pool of network buffers. Users request a buffer from the pool and return it to the pool once they are done with it. | |

| Network Module Internals | |

Data Structures | |

| struct | m0_net_xprt_module |

| struct | m0_net_module |

| struct | m0_net_xprt |

| struct | m0_net_xprt_ops |

| struct | m0_net_domain |

| struct | m0_net_end_point |

| struct | m0_net_tm_event |

| struct | m0_net_tm_callbacks |

| struct | m0_net_qstats |

| struct | m0_net_transfer_mc |

| struct | m0_net_buffer_event |

| struct | m0_net_buffer_callbacks |

| struct | m0_net_buffer |

| struct | m0_net_buf_desc |

| struct | m0_net_buf_desc_data |

Macros | |

| #define | DOM_GET_PARAM(Fn, Type) |

| #define | M0_NET_XPRT_PREFIX_DEFAULT "lnet" |

| #define | USE_LIBFAB 0 |

Typedefs | |

| typedef void(* | m0_net_buffer_cb_proc_t) (const struct m0_net_buffer_event *ev) |

Enumerations | |

| enum | { M0_AVI_NET_BUF = M0_AVI_NET_RANGE_START + 1 } |

| enum | m0_net_xprt_id { M0_NET_XPRT_LNET, M0_NET_XPRT_BULKMEM, M0_NET_XPRT_SOCK, M0_NET_XPRT_NR } |

| enum | { M0_LEVEL_NET } |

| enum | { M0_LEVEL_NET_DOMAIN } |

| enum | { M0_NET_TM_RECV_QUEUE_DEF_LEN = 2 } |

| enum | m0_net_queue_type { M0_NET_QT_MSG_RECV = 0, M0_NET_QT_MSG_SEND, M0_NET_QT_PASSIVE_BULK_RECV, M0_NET_QT_PASSIVE_BULK_SEND, M0_NET_QT_ACTIVE_BULK_RECV, M0_NET_QT_ACTIVE_BULK_SEND, M0_NET_QT_NR } |

| enum | m0_net_tm_state { M0_NET_TM_UNDEFINED = 0, M0_NET_TM_INITIALIZED, M0_NET_TM_STARTING, M0_NET_TM_STARTED, M0_NET_TM_STOPPING, M0_NET_TM_STOPPED, M0_NET_TM_FAILED } |

| enum | m0_net_tm_ev_type { M0_NET_TEV_ERROR = 0, M0_NET_TEV_STATE_CHANGE, M0_NET_TEV_DIAGNOSTIC, M0_NET_TEV_NR } |

| enum | m0_net_buf_flags { M0_NET_BUF_REGISTERED = 1 << 0, M0_NET_BUF_QUEUED = 1 << 1, M0_NET_BUF_IN_USE = 1 << 2, M0_NET_BUF_CANCELLED = 1 << 3, M0_NET_BUF_TIMED_OUT = 1 << 4, M0_NET_BUF_RETAIN = 1 << 5 } |

Variables | |

| const struct m0_module_type | m0_net_module_type |

| struct m0_mutex | m0_net_mutex |

Detailed Description

The networking module provides an asynchronous, event-based message passing service, with support for asynchronous bulk data transfer (if used with an RDMA capable transport).

Major data-types in M0 networking are:

- Network buffer (m0_net_buffer);

- Network buffer descriptor (m0_net_buf_desc);

- Network buffer event (m0_net_buffer_event);

- Network domain (m0_net_domain);

- Network end point (m0_net_end_point);

- Network transfer machine (m0_net_transfer_mc);

- Network transfer machine event (m0_net_tm_event);

- Network transport (m0_net_xprt);

For documentation links, refer to doc/motr-design-doc-list.rst.

See RPC Bulk Transfer Task Plan for details on the design and use of this API. If you are writing a transport, then the document is the reference for the internal threading and serialization model.

See HLD of Motr LNet Transport for additional details on the design and use of this API.

Macro Definition Documentation

◆ DOM_GET_PARAM

| #define DOM_GET_PARAM | ( | Fn, | Type | ) |

◆ M0_NET_XPRT_PREFIX_DEFAULT

◆ USE_LIBFAB

Typedef Documentation

◆ m0_net_buffer_cb_proc_t

| typedef void(* m0_net_buffer_cb_proc_t) (const struct m0_net_buffer_event *ev) |

Enumeration Type Documentation

◆ anonymous enum

◆ anonymous enum

| anonymous enum |

◆ anonymous enum

| anonymous enum |

Levels of m0_net_xprt_module::nx_module.

| Enumerator | |

|---|---|

| M0_LEVEL_NET_DOMAIN | m0_net_xprt_module::nx_domain has been initialised. |

◆ anonymous enum

| anonymous enum |

◆ m0_net_buf_flags

| enum m0_net_buf_flags |

Buffer state is tracked using these bitmap flags.

| Enumerator | |

|---|---|

| M0_NET_BUF_REGISTERED | The buffer is registered with the domain. |

| M0_NET_BUF_QUEUED | The buffer is added to a transfer machine logical queue. |

| M0_NET_BUF_IN_USE | Set when the transport starts using the buffer. |

| M0_NET_BUF_CANCELLED | Indicates that the buffer operation has been cancelled. |

| M0_NET_BUF_TIMED_OUT | Indicates that the buffer operation has timed out. |

| M0_NET_BUF_RETAIN | Set by the transport to indicate that a buffer should not be dequeued in a m0_net_buffer_event_post() call. |
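The preconditions later in this section test nb_flags in two different ways: some require an exact value, others only a single bit. The sketch below copies the bit values from the table above and restates those tests as predicates. It is illustrative only: the demo_* helpers are hypothetical, and the 64-bit flag width is an assumption.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit values copied from enum m0_net_buf_flags above. */
enum {
	M0_NET_BUF_REGISTERED = 1 << 0,
	M0_NET_BUF_QUEUED     = 1 << 1,
	M0_NET_BUF_IN_USE     = 1 << 2,
	M0_NET_BUF_CANCELLED  = 1 << 3,
	M0_NET_BUF_TIMED_OUT  = 1 << 4,
	M0_NET_BUF_RETAIN     = 1 << 5
};

/* m0_net_buffer_register() requires that no flag is set. */
static bool demo_can_register(uint64_t flags)
{
	return flags == 0;
}

/* m0_net_buffer_add() and m0_net_buffer_deregister() require a
 * registered buffer with no other flag set, i.e. an idle buffer. */
static bool demo_is_registered_idle(uint64_t flags)
{
	return flags == M0_NET_BUF_REGISTERED;
}

/* m0_net_buffer_del() only requires the REGISTERED bit. */
static bool demo_can_del(uint64_t flags)
{
	return (flags & M0_NET_BUF_REGISTERED) != 0;
}
```

The distinction matters in practice: a queued buffer can be deleted but not re-added or deregistered until its completion event has cleared the queue-related bits.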

◆ m0_net_queue_type

| enum m0_net_queue_type |

This enumeration describes the types of logical queues in a transfer machine.

- Note

- We use the term "queue" here to imply that the order of addition matters; in reality, while it may matter, external factors have a much larger influence on the actual order in which buffer operations complete; we're not implying FIFO semantics here!

Consider:

- The underlying transport manages message buffers. Since there is a great deal of concurrency and latency involved with network communication, and message sizes can vary, a completed (sent or received) message buffer is not necessarily the first that was added to its "queue" for that purpose.

- The upper protocol layers of the remote end points are responsible for initiating bulk data transfer operations, which ultimately determines when passive buffers complete in this process. The remote upper protocol layers can, and probably will, reorder requests into an optimal order for themselves, which does not necessarily correspond to the order in which the passive bulk data buffers were added.

In fact, the transfer machine itself is only interested in tracking buffer existence, and uses lists rather than queues internally.

◆ m0_net_tm_ev_type

| enum m0_net_tm_ev_type |

◆ m0_net_tm_state

| enum m0_net_tm_state |

A transfer machine can be in one of the following states.
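The subroutine preconditions documented on this page imply which transfer machine states each call accepts. The following sketch gathers them into a single predicate. The demo_tm_op tags and demo_op_allowed() are hypothetical; only the state names and the per-call state rules come from the documented preconditions, and the end point conditions of m0_net_tm_fini() are not modelled.

```c
#include <assert.h>
#include <stdbool.h>

/* Mirrors the enumerators of enum m0_net_tm_state. */
enum m0_net_tm_state {
	M0_NET_TM_UNDEFINED = 0,
	M0_NET_TM_INITIALIZED,
	M0_NET_TM_STARTING,
	M0_NET_TM_STARTED,
	M0_NET_TM_STOPPING,
	M0_NET_TM_STOPPED,
	M0_NET_TM_FAILED
};

/* Hypothetical tags, one per documented subroutine. */
enum demo_tm_op {
	DEMO_OP_CONFINE,     /* m0_net_tm_confine()        */
	DEMO_OP_POOL_ATTACH, /* m0_net_tm_pool_attach()    */
	DEMO_OP_START,       /* m0_net_tm_start()          */
	DEMO_OP_COLOUR_SET,  /* m0_net_tm_colour_set()     */
	DEMO_OP_BUFFER_ADD,  /* m0_net_buffer_add()        */
	DEMO_OP_EP_CREATE,   /* m0_net_end_point_create()  */
	DEMO_OP_FINI         /* m0_net_tm_fini(), state part only */
};

static bool demo_op_allowed(enum demo_tm_op op, enum m0_net_tm_state s)
{
	switch (op) {
	case DEMO_OP_CONFINE:
	case DEMO_OP_POOL_ATTACH:
	case DEMO_OP_START:
		return s == M0_NET_TM_INITIALIZED;
	case DEMO_OP_COLOUR_SET:
		return s == M0_NET_TM_INITIALIZED || s == M0_NET_TM_STARTED;
	case DEMO_OP_BUFFER_ADD:
	case DEMO_OP_EP_CREATE:
		return s == M0_NET_TM_STARTED;
	case DEMO_OP_FINI:
		return s == M0_NET_TM_STOPPED || s == M0_NET_TM_FAILED ||
		       s == M0_NET_TM_INITIALIZED;
	}
	return false;
}
```

Read this way, configuration calls (affinity, pool attachment) belong between init and start, while buffer and end point operations must wait for the M0_NET_TM_STARTED state.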

◆ m0_net_xprt_id

| enum m0_net_xprt_id |

Function Documentation

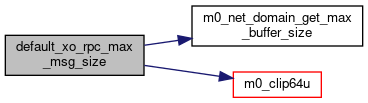

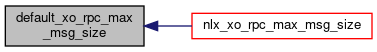

◆ default_xo_rpc_max_msg_size()

| M0_INTERNAL m0_bcount_t default_xo_rpc_max_msg_size | ( | struct m0_net_domain * | ndom, | m0_bcount_t | rpc_size | ) |

◆ default_xo_rpc_max_recv_msgs()

| M0_INTERNAL uint32_t default_xo_rpc_max_recv_msgs | ( | struct m0_net_domain * | ndom, | m0_bcount_t | rpc_size | ) |

◆ default_xo_rpc_max_seg_size()

| M0_INTERNAL m0_bcount_t default_xo_rpc_max_seg_size | ( | struct m0_net_domain * | ndom | ) |

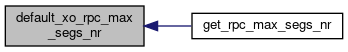

◆ default_xo_rpc_max_segs_nr()

| M0_INTERNAL uint32_t default_xo_rpc_max_segs_nr | ( | struct m0_net_domain * | ndom | ) |

◆ DOM_GET_PARAM() [1/4]

| DOM_GET_PARAM | ( | max_buffer_size | , | m0_bcount_t | ) |

◆ DOM_GET_PARAM() [2/4]

| DOM_GET_PARAM | ( | max_buffer_segment_size | , | m0_bcount_t | ) |

◆ DOM_GET_PARAM() [3/4]

| DOM_GET_PARAM | ( | max_buffer_segments | , | int32_t | ) |

◆ DOM_GET_PARAM() [4/4]

| DOM_GET_PARAM | ( | max_buffer_desc_size | , | m0_bcount_t | ) |

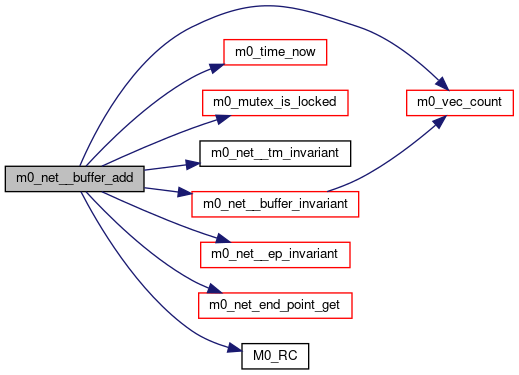

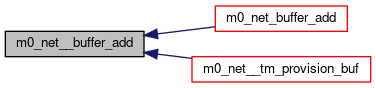

◆ m0_net__buffer_add()

| M0_INTERNAL int m0_net__buffer_add | ( | struct m0_net_buffer * | buf, | struct m0_net_transfer_mc * | tm | ) |

Internal version of m0_net_buffer_add() that must be invoked holding the TM mutex.

- Todo:

- should be m0_net_desc_free()?

Definition at line 130 of file buf.c.

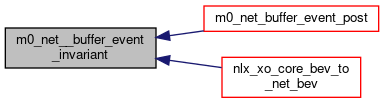

◆ m0_net__buffer_event_invariant()

| M0_INTERNAL bool m0_net__buffer_event_invariant | ( | const struct m0_net_buffer_event * | ev | ) |

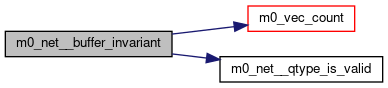

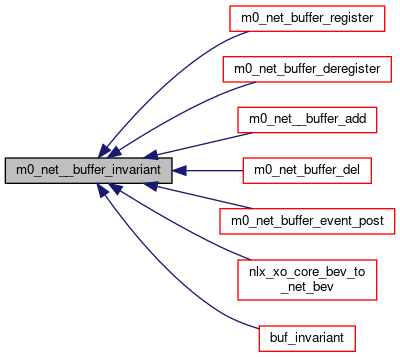

◆ m0_net__buffer_invariant()

| M0_INTERNAL bool m0_net__buffer_invariant | ( | const struct m0_net_buffer * | buf | ) |

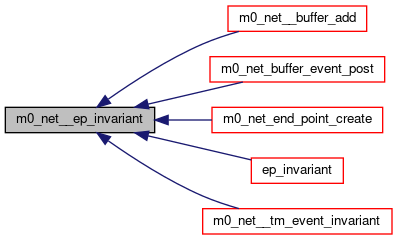

◆ m0_net__ep_invariant()

| M0_INTERNAL bool m0_net__ep_invariant | ( | struct m0_net_end_point * | ep, | struct m0_net_transfer_mc * | tm, | bool | under_tm_mutex | ) |

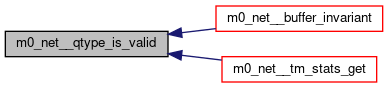

◆ m0_net__qtype_is_valid()

| M0_INTERNAL bool m0_net__qtype_is_valid | ( | enum m0_net_queue_type | qt | ) |

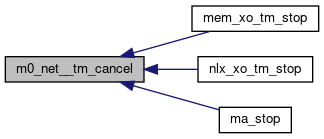

◆ m0_net__tm_cancel()

| M0_INTERNAL void m0_net__tm_cancel | ( | struct m0_net_transfer_mc * | tm | ) |

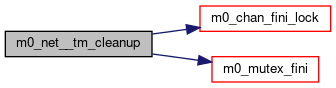

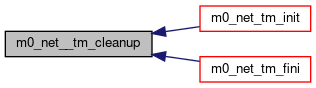

◆ m0_net__tm_cleanup()

| static |

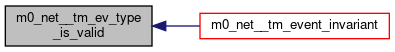

◆ m0_net__tm_ev_type_is_valid()

| M0_INTERNAL bool m0_net__tm_ev_type_is_valid | ( | enum m0_net_tm_ev_type | et | ) |

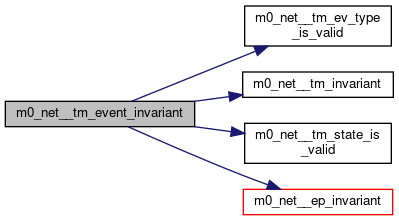

◆ m0_net__tm_event_invariant()

| M0_INTERNAL bool m0_net__tm_event_invariant | ( | const struct m0_net_tm_event * | ev | ) |

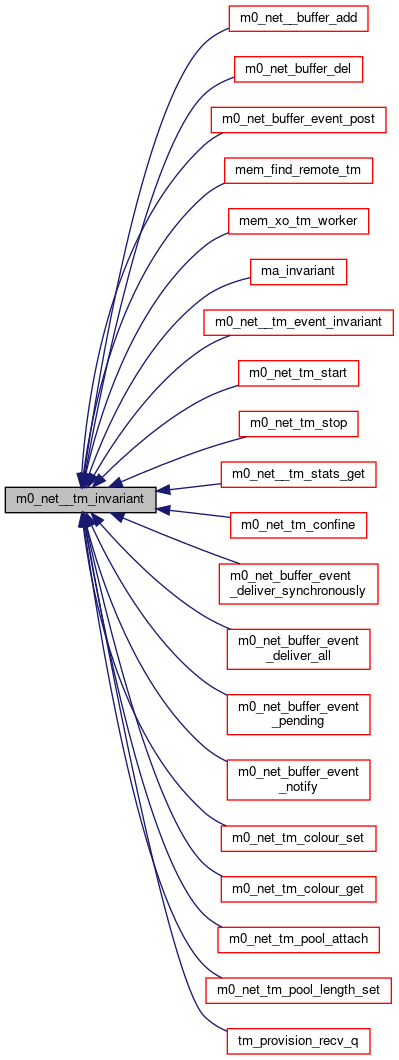

◆ m0_net__tm_invariant()

| M0_INTERNAL bool m0_net__tm_invariant | ( | const struct m0_net_transfer_mc * | tm | ) |

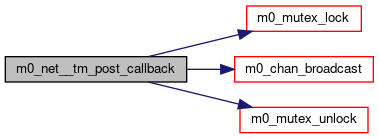

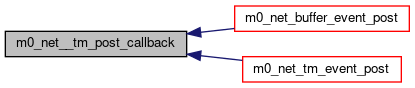

◆ m0_net__tm_post_callback()

| M0_INTERNAL void m0_net__tm_post_callback | ( | struct m0_net_transfer_mc * | tm | ) |

Common part of post-callback processing for m0_net_tm_event_post() and m0_net_buffer_event_post().

Under tm mutex: decrement ref counts, signal waiters

Definition at line 123 of file tm.c.

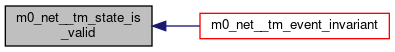

◆ m0_net__tm_state_is_valid()

| M0_INTERNAL bool m0_net__tm_state_is_valid | ( | enum m0_net_tm_state | ts | ) |

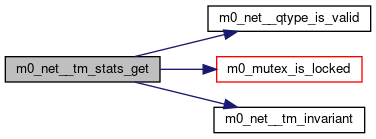

◆ m0_net__tm_stats_get()

| M0_INTERNAL int m0_net__tm_stats_get | ( | struct m0_net_transfer_mc * | tm, | enum m0_net_queue_type | qtype, | struct m0_net_qstats * | qs, | bool | reset | ) |

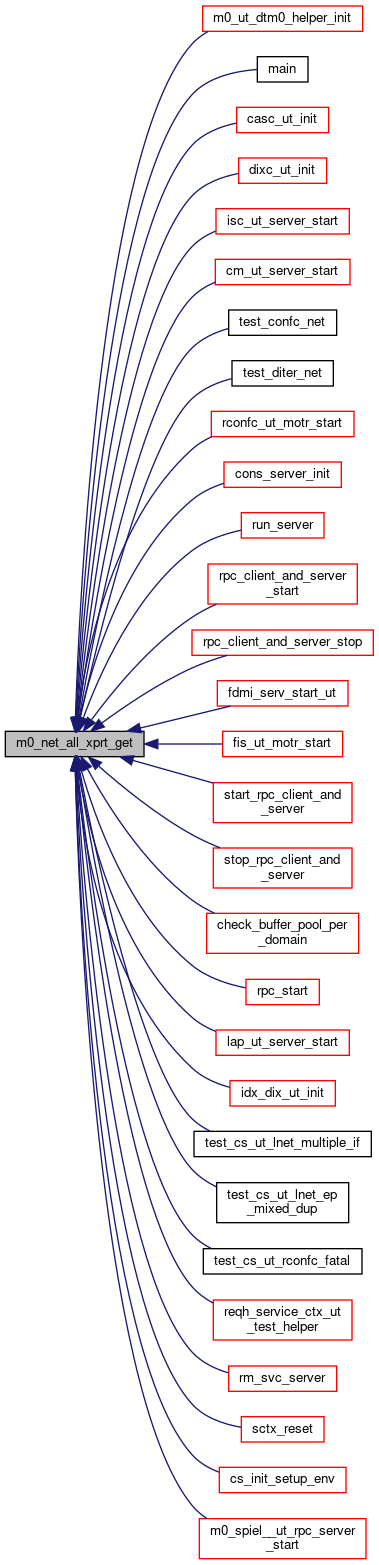

◆ m0_net_all_xprt_get()

| struct m0_net_xprt** m0_net_all_xprt_get | ( | void | ) |

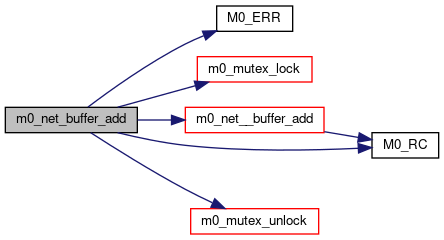

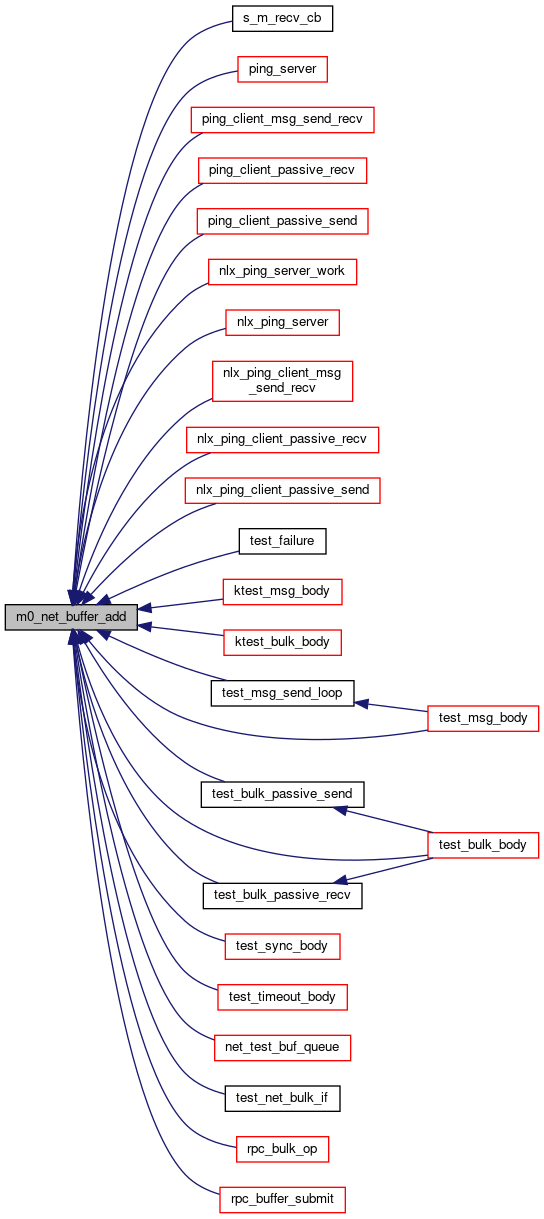

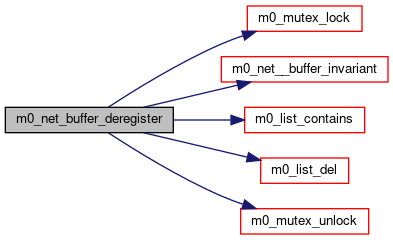

◆ m0_net_buffer_add()

| M0_INTERNAL int m0_net_buffer_add | ( | struct m0_net_buffer * | buf, | struct m0_net_transfer_mc * | tm | ) |

Adds a registered buffer to a transfer machine's logical queue specified by the m0_net_buffer.nb_qtype value.

- Buffers added to the M0_NET_QT_MSG_RECV queue are used to receive messages.

- When data is contained in the buffer, as in the case of the M0_NET_QT_MSG_SEND, M0_NET_QT_PASSIVE_BULK_SEND and M0_NET_QT_ACTIVE_BULK_SEND queues, the application must set the m0_net_buffer.nb_length field to the actual length of the data to be transferred.

- Buffers added to the M0_NET_QT_MSG_SEND queue must identify the message destination end point with the m0_net_buffer.nb_ep field.

- Buffers added to the M0_NET_QT_PASSIVE_BULK_RECV or M0_NET_QT_PASSIVE_BULK_SEND queues must have their m0_net_buffer.nb_ep field set to identify the end point that will initiate the bulk data transfer. Upon return from this subroutine the m0_net_buffer.nb_desc field will be set to the network buffer descriptor to be conveyed to said end point.

- Buffers added to the M0_NET_QT_ACTIVE_BULK_RECV or M0_NET_QT_ACTIVE_BULK_SEND queues must have their m0_net_buffer.nb_desc field set to the network buffer descriptor associated with the passive buffer.

- The callback function pointer for the appropriate queue type must be set in nb_callbacks.

The buffer should not be modified until the operation completion callback is invoked for the buffer.

The buffer completion callback is guaranteed to be invoked on a different thread.

- Precondition

- buf->nb_dom == tm->ntm_dom

- tm->ntm_state == M0_NET_TM_STARTED

- m0_net__qtype_is_valid(buf->nb_qtype)

- buf->nb_flags == M0_NET_BUF_REGISTERED

- buf->nb_callbacks->nbc_cb[buf->nb_qtype] != NULL

- ergo(buf->nb_qtype == M0_NET_QT_MSG_RECV, buf->nb_min_receive_size != 0 && buf->nb_max_receive_msgs != 0)

- ergo(buf->nb_qtype == M0_NET_QT_MSG_SEND, buf->nb_ep != NULL)

- ergo(M0_IN(buf->nb_qtype, (M0_NET_QT_ACTIVE_BULK_RECV, M0_NET_QT_ACTIVE_BULK_SEND)), buf->nb_desc.nbd_len != 0)

- ergo(M0_IN(buf->nb_qtype, (M0_NET_QT_MSG_SEND, M0_NET_QT_PASSIVE_BULK_SEND, M0_NET_QT_ACTIVE_BULK_SEND)), buf->nb_length > 0)

- Return values

- -ETIME: nb_timeout is set to a value other than M0_TIME_NEVER that lies in the past. Note that this differs from the buffer timeout error code, -ETIMEDOUT.

- Note

- Receiving a successful buffer completion callback is not a guarantee that a data transfer actually took place, but merely an indication that the transport reported the operation was successfully executed. See the transport documentation for details.

Definition at line 247 of file buf.c.
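The buffer-level preconditions and the -ETIME rule of m0_net_buffer_add() can be restated as a standalone check. This is a sketch against a hypothetical, simplified demo_buf structure (the field comments name the m0_net_buffer fields they stand in for). It omits the domain and transfer machine state checks, and it assumes time is a plain uint64_t with UINT64_MAX standing in for M0_TIME_NEVER.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Queue types in the same order as enum m0_net_queue_type. */
enum demo_qtype {
	DEMO_QT_MSG_RECV,
	DEMO_QT_MSG_SEND,
	DEMO_QT_PASSIVE_BULK_RECV,
	DEMO_QT_PASSIVE_BULK_SEND,
	DEMO_QT_ACTIVE_BULK_RECV,
	DEMO_QT_ACTIVE_BULK_SEND,
	DEMO_QT_NR
};

#define DEMO_BUF_REGISTERED (1u << 0)  /* M0_NET_BUF_REGISTERED */
#define DEMO_TIME_NEVER     UINT64_MAX /* assumed stand-in for M0_TIME_NEVER */

/* Hypothetical, simplified stand-in for struct m0_net_buffer. */
struct demo_buf {
	int      qtype;    /* nb_qtype */
	uint64_t flags;    /* nb_flags */
	void    *ep;       /* nb_ep */
	uint32_t desc_len; /* nb_desc.nbd_len */
	uint64_t length;   /* nb_length */
	uint64_t min_recv; /* nb_min_receive_size */
	uint32_t max_msgs; /* nb_max_receive_msgs */
	uint64_t timeout;  /* nb_timeout (absolute) */
	bool     has_cb;   /* nb_callbacks->nbc_cb[nb_qtype] != NULL */
};

/* Buffer-level preconditions of m0_net_buffer_add(). */
static bool demo_add_pre(const struct demo_buf *b)
{
	if (b->qtype < 0 || b->qtype >= DEMO_QT_NR)
		return false;
	if (b->flags != DEMO_BUF_REGISTERED || !b->has_cb)
		return false;
	switch (b->qtype) {
	case DEMO_QT_MSG_RECV:
		return b->min_recv != 0 && b->max_msgs != 0;
	case DEMO_QT_MSG_SEND:
		return b->ep != NULL && b->length > 0;
	case DEMO_QT_PASSIVE_BULK_SEND:
		return b->length > 0;
	case DEMO_QT_ACTIVE_BULK_RECV:
		return b->desc_len != 0;
	case DEMO_QT_ACTIVE_BULK_SEND:
		return b->desc_len != 0 && b->length > 0;
	default: /* DEMO_QT_PASSIVE_BULK_RECV: no extra listed precondition */
		return true;
	}
}

/* -ETIME: a finite timeout that already lies in the past. */
static int demo_add_timeout_rc(const struct demo_buf *b, uint64_t now)
{
	return (b->timeout != DEMO_TIME_NEVER && b->timeout < now) ? -ETIME : 0;
}
```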

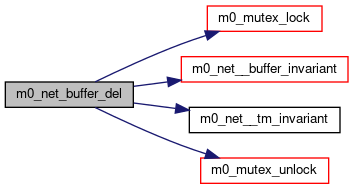

◆ m0_net_buffer_del()

| M0_INTERNAL bool m0_net_buffer_del | ( | struct m0_net_buffer * | buf, | struct m0_net_transfer_mc * | tm | ) |

Removes a registered buffer from a logical queue, if possible, cancelling any operation in progress.

Cancellation support is provided by the underlying transport. It is not guaranteed that actual cancellation of the operation in progress will always be supported, and even if it is, there are race conditions in the execution of this request and the concurrent invocation of the completion callback on the buffer.

The transport should set the M0_NET_BUF_CANCELLED flag in the buffer if the operation has not yet started. The flag will be cleared by m0_net_buffer_event_post().

The buffer completion callback is guaranteed to be invoked on a different thread.

- Precondition

- buf->nb_flags & M0_NET_BUF_REGISTERED

- Return values

- true: success.

- false: failure (the buffer is not queued).

Definition at line 261 of file buf.c.
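The m0_net_buffer_del() contract can be modelled on the flag word alone. In this hypothetical sketch, "the operation has not yet started" is taken to mean that M0_NET_BUF_IN_USE is clear, following the flag descriptions above. Whether an in-progress operation is actually cancelled remains transport specific, so the model simply reports success in that case.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit values copied from enum m0_net_buf_flags. */
enum {
	M0_NET_BUF_REGISTERED = 1 << 0,
	M0_NET_BUF_QUEUED     = 1 << 1,
	M0_NET_BUF_IN_USE     = 1 << 2,
	M0_NET_BUF_CANCELLED  = 1 << 3
};

/* Hypothetical model of m0_net_buffer_del() acting on nb_flags only. */
static bool demo_buffer_del(uint64_t *flags)
{
	assert(*flags & M0_NET_BUF_REGISTERED); /* documented precondition */
	if (!(*flags & M0_NET_BUF_QUEUED))
		return false;                   /* failure: not queued */
	if (!(*flags & M0_NET_BUF_IN_USE))
		*flags |= M0_NET_BUF_CANCELLED; /* not started: mark cancelled */
	/* If IN_USE is set, cancellation is left to the transport. */
	return true;
}
```

The completion callback still follows, on a different thread, with a status of -ECANCELED when the CANCELLED flag was set.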

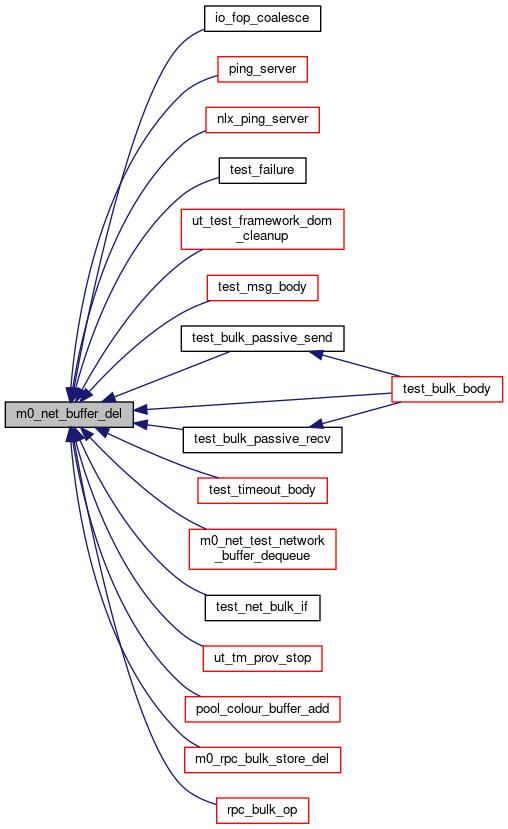

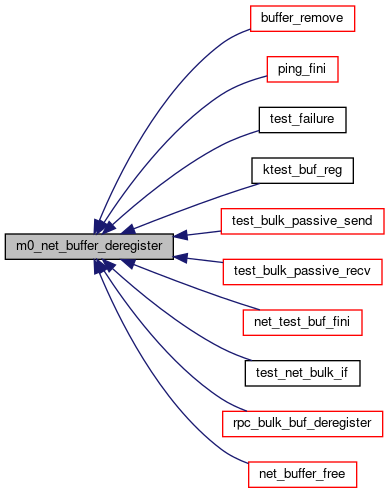

◆ m0_net_buffer_deregister()

| M0_INTERNAL void m0_net_buffer_deregister | ( | struct m0_net_buffer * | buf, | struct m0_net_domain * | dom | ) |

Deregisters a previously registered buffer and releases any transport specific resources associated with it. The buffer should not be in use, nor should this subroutine be invoked from a callback.

- Precondition

- buf->nb_flags == M0_NET_BUF_REGISTERED

- buf->nb_dom == dom

Definition at line 107 of file buf.c.

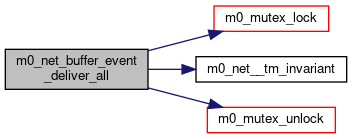

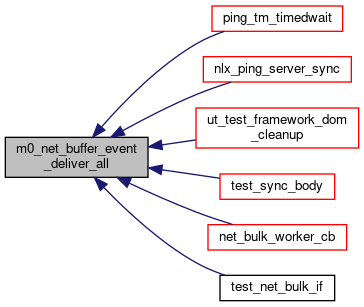

◆ m0_net_buffer_event_deliver_all()

| M0_INTERNAL void m0_net_buffer_event_deliver_all | ( | struct m0_net_transfer_mc * | tm | ) |

Delivers all pending network buffer events by calling m0_net_buffer_event_post() for every pending event. It should be called periodically by the application if synchronous network buffer event processing is enabled.

- Parameters

- tm: Pointer to a transfer machine which has been set up for synchronous network buffer event processing.

- Precondition

- !tm->ntm_bev_auto_deliver

- See also

- m0_net_buffer_event_deliver_synchronously(), m0_net_buffer_event_pending(), m0_net_buffer_event_notify()

Definition at line 397 of file tm.c.

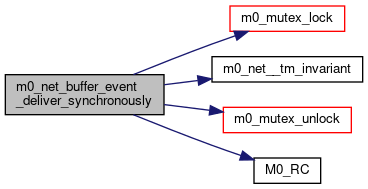

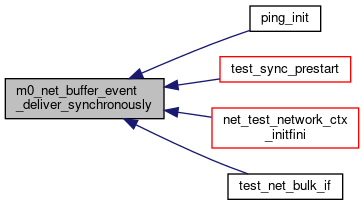

◆ m0_net_buffer_event_deliver_synchronously()

| M0_INTERNAL int m0_net_buffer_event_deliver_synchronously | ( | struct m0_net_transfer_mc * | tm | ) |

This subroutine disables the automatic delivery of network buffer events. Instead, the application should use the m0_net_buffer_event_pending() subroutine to check for the presence of events, and the m0_net_buffer_event_deliver_all() subroutine to cause pending events to be delivered. The m0_net_buffer_event_notify() subroutine can be used to get notified on a wait channel when buffer events arrive.

Support for this mode of operation is transport specific.

The subroutine must be invoked before the transfer machine is started.

- Precondition

- tm->ntm_bev_auto_deliver

- Postcondition

- !tm->ntm_bev_auto_deliver

- See also

- m0_net_buffer_event_pending(), m0_net_buffer_event_deliver_all(), m0_net_buffer_event_notify()

Definition at line 377 of file tm.c.

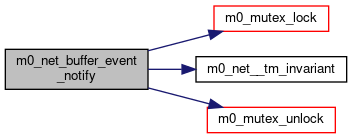

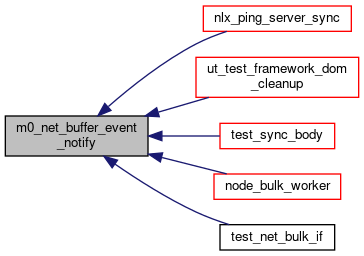

◆ m0_net_buffer_event_notify()

| M0_INTERNAL void m0_net_buffer_event_notify | ( | struct m0_net_transfer_mc * | tm, | struct m0_chan * | chan | ) |

This subroutine arranges for notification of the arrival of the next network buffer event to be signalled on the specified channel. Typically, this subroutine is called only when the m0_net_buffer_event_pending() subroutine indicates that there are no events pending. The subroutine does not block the invoker.

- Note

- The subroutine exhibits "one-shot" behavior: it signals the specified wait channel only once.

- Precondition

- !tm->ntm_bev_auto_deliver

Definition at line 423 of file tm.c.

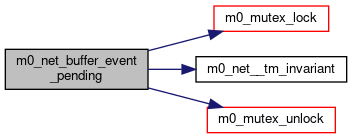

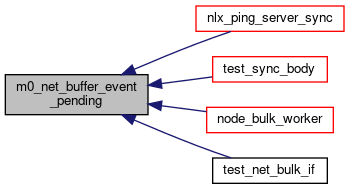

◆ m0_net_buffer_event_pending()

| M0_INTERNAL bool m0_net_buffer_event_pending | ( | struct m0_net_transfer_mc * | tm | ) |

This subroutine determines if there are pending network buffer events that can be delivered with the m0_net_buffer_event_deliver_all() subroutine.

- Precondition

- !tm->ntm_bev_auto_deliver

Definition at line 409 of file tm.c.
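The synchronous-delivery subroutines above are meant to be used together: check for pending events, deliver them, and otherwise arm a one-shot notification and wait. The following self-contained pthread model sketches that loop. All demo_* names are hypothetical stand-ins, a condition variable plays the role of the m0_chan, and real delivery would invoke a buffer callback per event rather than increment a counter. demo_wait() re-checks the pending count because an event can arrive between the pending check and arming the notification.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>

/* Hypothetical transfer machine in synchronous delivery mode. */
struct demo_tm {
	pthread_mutex_t lock;
	pthread_cond_t  chan;      /* plays the role of the m0_chan */
	int             pending;   /* events not yet delivered */
	int             delivered; /* events handed to callbacks */
	bool            armed;     /* notification requested */
	bool            signalled; /* notification has fired */
};

/* Transport side: queue one event; fire an armed notification once. */
static void demo_transport_post(struct demo_tm *tm)
{
	pthread_mutex_lock(&tm->lock);
	tm->pending++;
	if (tm->armed) {
		tm->armed = false; /* one-shot: disarm after signalling */
		tm->signalled = true;
		pthread_cond_broadcast(&tm->chan);
	}
	pthread_mutex_unlock(&tm->lock);
}

/* Hypothetical transport thread that posts three events. */
static void *demo_transport_thread(void *arg)
{
	for (int i = 0; i < 3; i++)
		demo_transport_post(arg);
	return NULL;
}

/* Analogue of m0_net_buffer_event_pending(). */
static bool demo_event_pending(struct demo_tm *tm)
{
	bool pending;

	pthread_mutex_lock(&tm->lock);
	pending = tm->pending > 0;
	pthread_mutex_unlock(&tm->lock);
	return pending;
}

/* Analogue of m0_net_buffer_event_deliver_all(). */
static void demo_deliver_all(struct demo_tm *tm)
{
	pthread_mutex_lock(&tm->lock);
	tm->delivered += tm->pending; /* one callback per event in reality */
	tm->pending = 0;
	pthread_mutex_unlock(&tm->lock);
}

/* Analogue of m0_net_buffer_event_notify(): arm and return at once. */
static void demo_event_notify(struct demo_tm *tm)
{
	pthread_mutex_lock(&tm->lock);
	tm->armed = true;
	tm->signalled = false;
	pthread_mutex_unlock(&tm->lock);
}

/* Block until the notification fires or an event is already pending. */
static void demo_wait(struct demo_tm *tm)
{
	pthread_mutex_lock(&tm->lock);
	while (!tm->signalled && tm->pending == 0)
		pthread_cond_wait(&tm->chan, &tm->lock);
	pthread_mutex_unlock(&tm->lock);
}

/* Application loop: run until `want` events have been delivered.
 * Only this thread writes `delivered`, so reading it here is safe. */
static void demo_event_loop(struct demo_tm *tm, int want)
{
	while (tm->delivered < want) {
		if (demo_event_pending(tm)) {
			demo_deliver_all(tm);
		} else {
			demo_event_notify(tm);
			demo_wait(tm);
		}
	}
}
```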

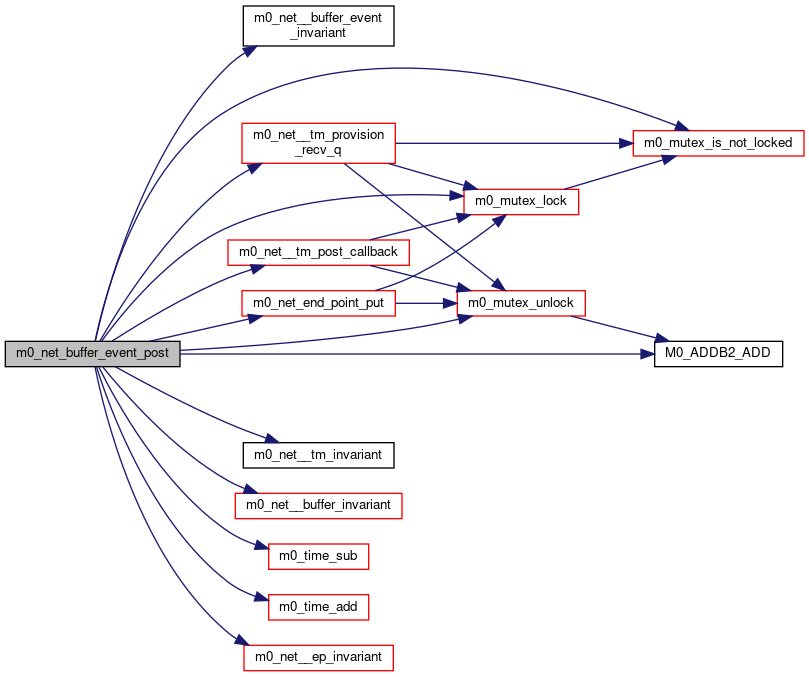

◆ m0_net_buffer_event_post()

| M0_INTERNAL void m0_net_buffer_event_post | ( | const struct m0_net_buffer_event * | ev | ) |

A transfer machine is notified of buffer related events with this subroutine.

Typically, the subroutine is invoked by the transport associated with the transfer machine.

The event data structure is not referenced from elsewhere after this subroutine returns, so it may be allocated on the stack of the calling thread.

Multiple concurrent events may be delivered for a given buffer, depending upon the transport.

The subroutine will remove a buffer from its queue if the M0_NET_BUF_RETAIN flag is not set. It will clear the M0_NET_BUF_QUEUED and M0_NET_BUF_IN_USE flags and set the nb_timeout field to M0_TIME_NEVER if the buffer is dequeued. It will always clear the M0_NET_BUF_RETAIN, M0_NET_BUF_CANCELLED and M0_NET_BUF_TIMED_OUT flags prior to invoking the callback. The M0_NET_BUF_RETAIN flag must not be set if the status indicates error.

If the M0_NET_BUF_CANCELLED flag was set, then the status must be -ECANCELED.

If the M0_NET_BUF_TIMED_OUT flag was set, then the status must be -ETIMEDOUT.
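The flag rules for m0_net_buffer_event_post() amount to a small state update performed before the callback runs. This sketch models only that bookkeeping on a hypothetical demo_buf: removal from the logical queue is reduced to a boolean, UINT64_MAX is assumed to stand in for M0_TIME_NEVER, and the end point reference adjustments are not included. The "must" rules are expressed as assertions on the status value.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stdint.h>

/* Bit values copied from enum m0_net_buf_flags. */
enum {
	M0_NET_BUF_REGISTERED = 1 << 0,
	M0_NET_BUF_QUEUED     = 1 << 1,
	M0_NET_BUF_IN_USE     = 1 << 2,
	M0_NET_BUF_CANCELLED  = 1 << 3,
	M0_NET_BUF_TIMED_OUT  = 1 << 4,
	M0_NET_BUF_RETAIN     = 1 << 5
};

#define DEMO_TIME_NEVER UINT64_MAX /* assumed stand-in for M0_TIME_NEVER */

/* Hypothetical buffer: flags, timeout and queue membership only. */
struct demo_buf {
	uint64_t flags;    /* nb_flags */
	uint64_t timeout;  /* nb_timeout */
	bool     on_queue; /* still linked on its logical queue */
};

/* Flag bookkeeping before the completion callback; status = nbe_status. */
static void demo_event_post_flags(struct demo_buf *b, int status)
{
	bool retain = b->flags & M0_NET_BUF_RETAIN;

	assert(!(retain && status != 0));
	assert(!(b->flags & M0_NET_BUF_CANCELLED) || status == -ECANCELED);
	assert(!(b->flags & M0_NET_BUF_TIMED_OUT) || status == -ETIMEDOUT);

	if (!retain) { /* dequeue */
		b->on_queue = false;
		b->flags &= ~(uint64_t)(M0_NET_BUF_QUEUED | M0_NET_BUF_IN_USE);
		b->timeout = DEMO_TIME_NEVER;
	}
	b->flags &= ~(uint64_t)(M0_NET_BUF_RETAIN | M0_NET_BUF_CANCELLED |
				M0_NET_BUF_TIMED_OUT);
	/* The callback for the buffer's queue type would run here. */
}
```

With RETAIN set (for example, a receive buffer that can hold further messages) the buffer stays queued and in use; the final event without RETAIN releases it back to the idle, registered state.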

The subroutine performs an m0_net_end_point_put() on ev->nbe_ep if the queue type is M0_NET_QT_MSG_RECV and the nbe_status value is 0. For the M0_NET_QT_MSG_SEND queue, it also performs an m0_net_end_point_put() to match the m0_net_end_point_get() made in the m0_net_buffer_add() call. The transport should take care to accommodate these adjustments when invoking the subroutine with the M0_NET_BUF_RETAIN flag set.

The subroutine will also signal to all waiters on the m0_net_transfer_mc.ntm_chan field after delivery of the callback.

The invoking process should be aware that the callback subroutine could end up making re-entrant calls to the transport layer.

- Parameters

- ev: Event pointer. The nbe_buffer field identifies the buffer, and the buffer's nb_tm field identifies the associated transfer machine.

- See also

- m0_net_tm_event_post()

Definition at line 314 of file buf.c.

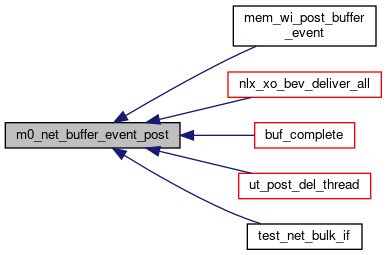

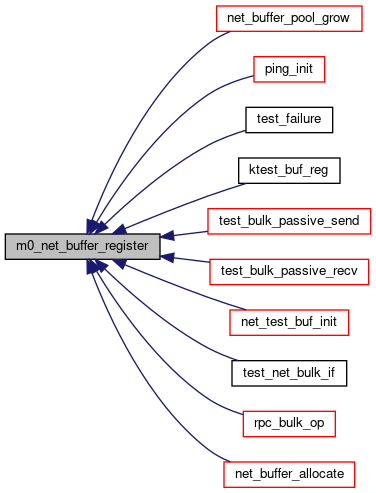

◆ m0_net_buffer_register()

| M0_INTERNAL int m0_net_buffer_register | ( | struct m0_net_buffer * | buf, | struct m0_net_domain * | dom | ) |

Registers a buffer with the domain. The domain could perform some optimizations under the covers.

- Parameters

- buf: Pointer to a buffer. The m0_net_buffer.nb_buffer field must be initialized to point to the buffer memory regions. The buffer's timeout value is initialized to M0_TIME_NEVER upon return.

- Precondition

- buf->nb_flags == 0

- buf->nb_buffer.ov_buf != NULL

- m0_vec_count(&buf->nb_buffer.ov_vec) > 0

- Postcondition

- ergo(result == 0, buf->nb_flags & M0_NET_BUF_REGISTERED)

- ergo(result == 0, buf->nb_timeout == M0_TIME_NEVER)

- Parameters

- dom: Pointer to the domain.

Definition at line 65 of file buf.c.

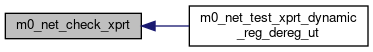

◆ m0_net_check_xprt()

| M0_INTERNAL bool m0_net_check_xprt | ( | const struct m0_net_xprt * | xprt | ) |

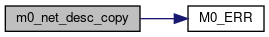

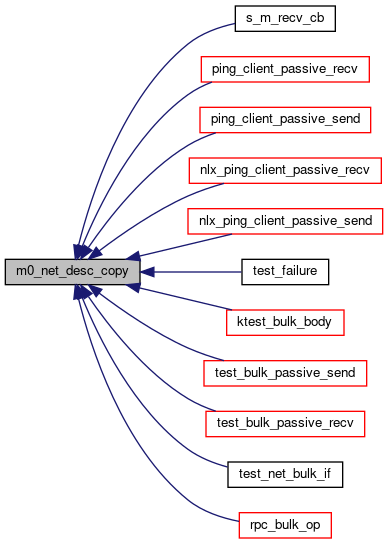

◆ m0_net_desc_copy()

| M0_INTERNAL int m0_net_desc_copy | ( | const struct m0_net_buf_desc * | from_desc, | struct m0_net_buf_desc * | to_desc | ) |

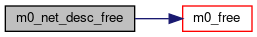

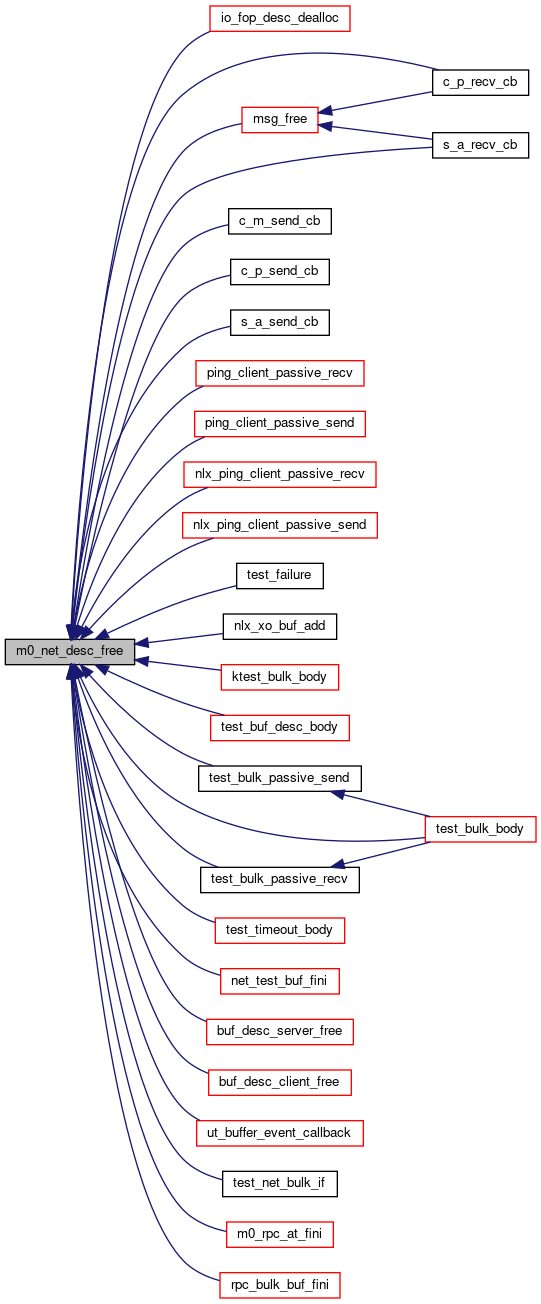

◆ m0_net_desc_free()

| M0_INTERNAL void m0_net_desc_free | ( | struct m0_net_buf_desc * | desc | ) |

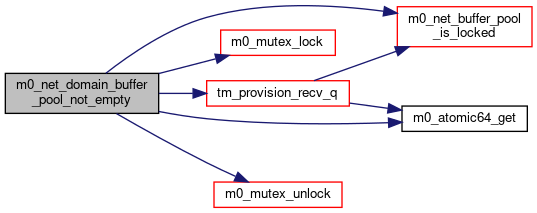

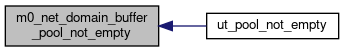

◆ m0_net_domain_buffer_pool_not_empty()

| M0_INTERNAL void m0_net_domain_buffer_pool_not_empty | ( | struct m0_net_buffer_pool * | pool | ) |

Reprovisions all transfer machines in the network domain of this buffer pool that are associated with the pool.

The application typically arranges for this subroutine to be called from the pool's not-empty callback operation.

The subroutine should be invoked while holding the pool lock, which is normally the case in the pool not-empty callback, m0_net_buffer_pool_ops::nbpo_not_empty().

- Parameters

- pool: A network buffer pool.

- See also

- m0_net_tm_pool_attach()

Definition at line 484 of file tm_provision.c.

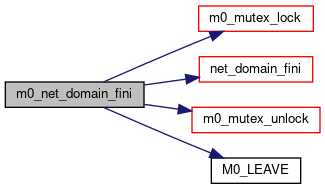

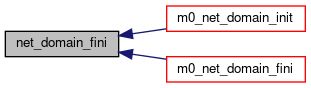

◆ m0_net_domain_fini()

| void m0_net_domain_fini | ( | struct m0_net_domain * | dom | ) |

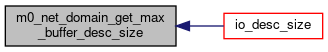

◆ m0_net_domain_get_max_buffer_desc_size()

| M0_INTERNAL m0_bcount_t m0_net_domain_get_max_buffer_desc_size | ( | struct m0_net_domain * | dom | ) |

Returns the size of m0_net_buf_desc for a given net domain.

- Return values

- size: The size of m0_net_buf_desc for the transport associated with the given net domain.

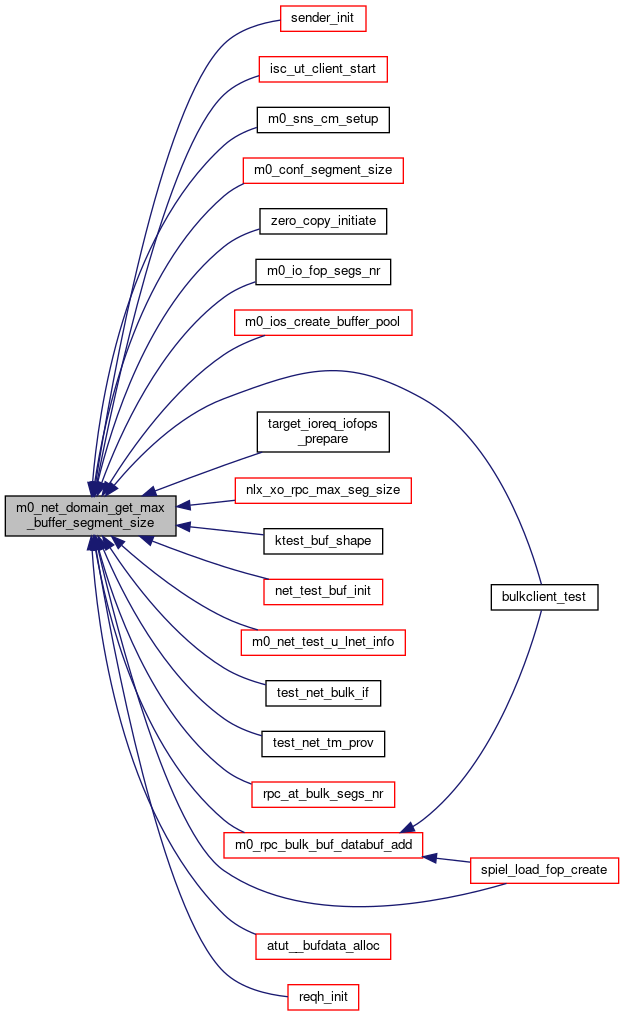

◆ m0_net_domain_get_max_buffer_segment_size()

| M0_INTERNAL m0_bcount_t m0_net_domain_get_max_buffer_segment_size | ( | struct m0_net_domain * | dom | ) |

Returns the maximum buffer segment size allowed for the domain.

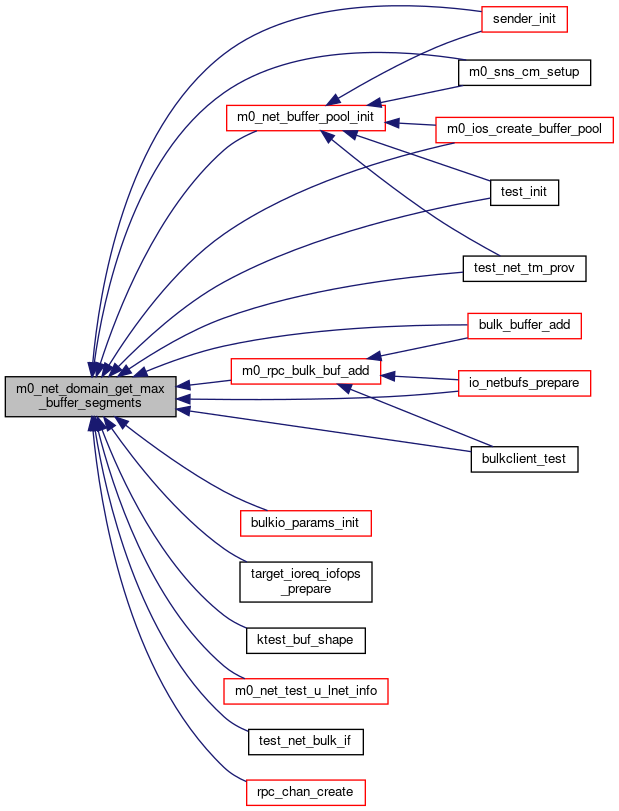

◆ m0_net_domain_get_max_buffer_segments()

| M0_INTERNAL int32_t m0_net_domain_get_max_buffer_segments | ( | struct m0_net_domain * | dom | ) |

Returns the maximum number of buffer segments for the domain.

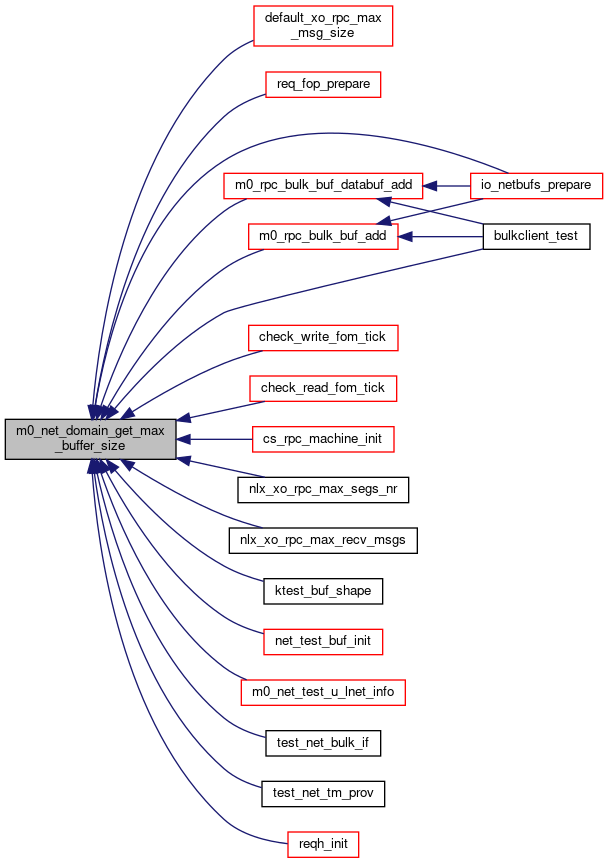

◆ m0_net_domain_get_max_buffer_size()

| M0_INTERNAL m0_bcount_t m0_net_domain_get_max_buffer_size | ( | struct m0_net_domain * | dom | ) |

Returns the maximum buffer size allowed for the domain. This size includes all segments.

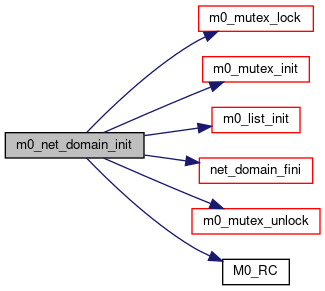

◆ m0_net_domain_init()

| int m0_net_domain_init | ( | struct m0_net_domain * | dom, | const struct m0_net_xprt * | xprt | ) |

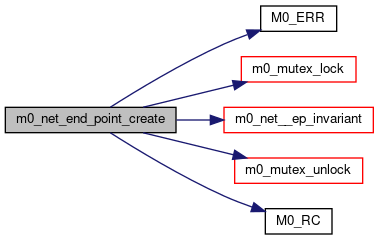

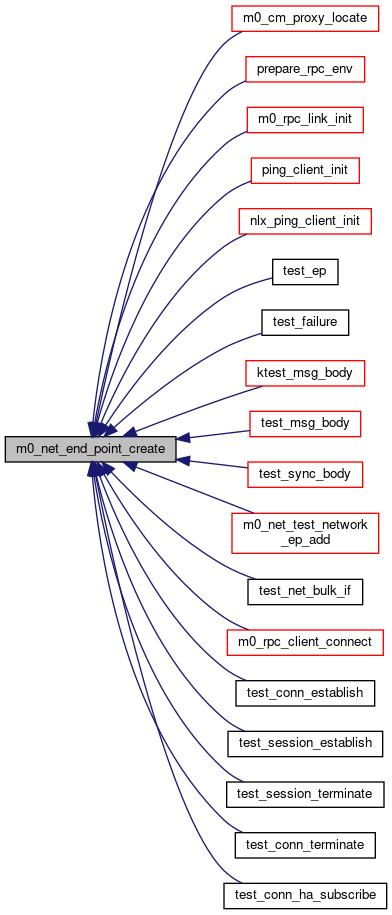

◆ m0_net_end_point_create()

| M0_INTERNAL int m0_net_end_point_create | ( | struct m0_net_end_point ** | epp, | struct m0_net_transfer_mc * | tm, | const char * | addr | ) |

Allocates an end point data structure representing the desired end point and sets its reference count to 1, or increments the reference count of an existing matching data structure. The data structure is linked to the transfer machine. The invoker should call the m0_net_end_point_put() when the data structure is no longer needed.

- Parameters

- epp: Pointer to a pointer to the data structure, which will be set upon return. The reference count of the returned data structure will be at least 1.

- tm: Transfer machine pointer. The transfer machine must be in the started state.

- addr: String describing the end point address in a transport-specific manner. The format of this address string is the same as the printable representation stored in the end point nep_addr field. It is optional: if NULL, the transport may support assignment of an end point with a dynamic address, but this is not guaranteed. The address string, if specified, is not referenced again after return from this subroutine (i.e., the allocated end point gets a copy).

- Precondition

- tm->ntm_state == M0_NET_TM_STARTED

- Postcondition

- (*epp)->nep_ref->ref_cnt >= 1 && (*epp)->nep_addr != NULL && (*epp)->nep_tm == tm

Definition at line 56 of file ep.c.

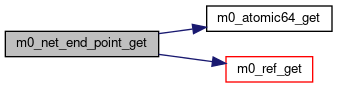

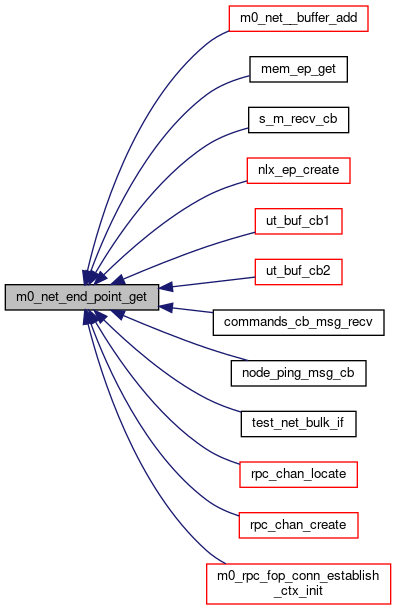

◆ m0_net_end_point_get()

| M0_INTERNAL void m0_net_end_point_get | ( | struct m0_net_end_point * | ep | ) |

Increments the reference count of an end point data structure. This is used to safely point to the structure in a different context - when done, the reference count should be decremented by a call to m0_net_end_point_put().

- Precondition

- ep->nep_ref->ref_cnt > 0

Definition at line 88 of file ep.c.

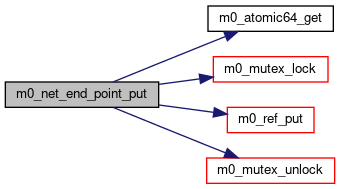

◆ m0_net_end_point_put()

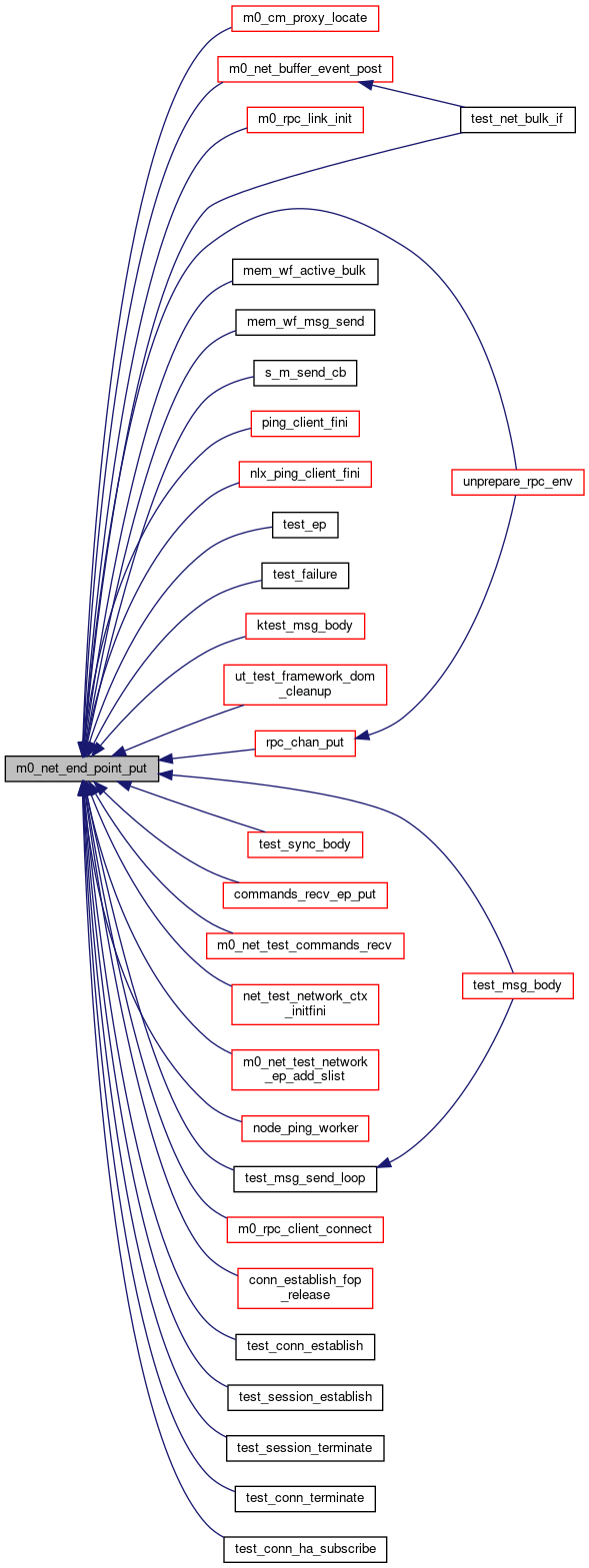

| void m0_net_end_point_put | ( | struct m0_net_end_point * | ep | ) |

Decrements the reference count of an end point data structure. The structure will be released when the count goes to 0.

- Parameters

- ep: End point data structure pointer. Do not dereference this pointer after this call.

- Precondition

- ep->nep_ref->ref_cnt >= 1

- Note

- The transfer machine mutex will be obtained internally to synchronize the transport provided release() method in case the end point gets released.

Definition at line 98 of file ep.c.
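The create/get/put reference counting described above can be sketched with a per-transfer-machine list of end points. The demo_* types and functions are hypothetical; the model omits the transfer machine mutex, the transport release() method and the NULL-address (dynamic addressing) case.

```c
#include <assert.h>
#include <errno.h>
#include <stdlib.h>
#include <string.h>

struct demo_tm;

/* Hypothetical stand-in for struct m0_net_end_point. */
struct demo_ep {
	struct demo_ep *next; /* link in the TM's list of end points */
	struct demo_tm *tm;   /* owning transfer machine (like nep_tm) */
	char           *addr; /* private copy of the address (like nep_addr) */
	long            ref;  /* reference count */
};

/* Hypothetical stand-in for the TM's end point list. */
struct demo_tm {
	struct demo_ep *eps;
};

/* Like m0_net_end_point_create(): reuse a matching end point, or create
 * one with a reference count of 1 and link it to the TM. */
static int demo_ep_create(struct demo_ep **epp, struct demo_tm *tm,
			  const char *addr)
{
	struct demo_ep *ep;
	size_t          len;

	for (ep = tm->eps; ep != NULL; ep = ep->next) {
		if (strcmp(ep->addr, addr) == 0) {
			ep->ref++;
			*epp = ep;
			return 0;
		}
	}
	ep = calloc(1, sizeof *ep);
	if (ep == NULL)
		return -ENOMEM;
	len = strlen(addr) + 1;
	ep->addr = malloc(len);
	if (ep->addr == NULL) {
		free(ep);
		return -ENOMEM;
	}
	memcpy(ep->addr, addr, len); /* the caller's string is not retained */
	ep->tm   = tm;
	ep->ref  = 1;
	ep->next = tm->eps;
	tm->eps  = ep;
	*epp = ep;
	return 0;
}

/* Like m0_net_end_point_get(). */
static void demo_ep_get(struct demo_ep *ep)
{
	assert(ep->ref > 0);
	ep->ref++;
}

/* Like m0_net_end_point_put(): unlink and free when the count hits 0. */
static void demo_ep_put(struct demo_ep *ep)
{
	struct demo_ep **pp;

	assert(ep->ref >= 1);
	if (--ep->ref > 0)
		return;
	for (pp = &ep->tm->eps; *pp != ep; pp = &(*pp)->next)
		;
	*pp = ep->next;
	free(ep->addr);
	free(ep);
}
```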

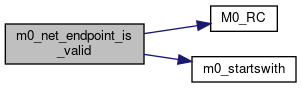

◆ m0_net_endpoint_is_valid()

| M0_INTERNAL bool m0_net_endpoint_is_valid | ( | const char * | endpoint | ) |

Checks whether endpoint address is properly formatted.

Endpoint address format (ABNF):

endpoint = nid ":12345:" 1*DIGIT ":" 1*DIGIT

; <network id>:<process id>:<portal number>:<transfer machine id>

nid = "0@lo" / (ipv4addr "@" ("tcp" / "o2ib") [DIGIT])

ipv4addr = 1*3DIGIT "." 1*3DIGIT "." 1*3DIGIT "." 1*3DIGIT ; 0..255

Definition at line 99 of file net.c.
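As an illustration of the grammar above, the following hand-written recursive-descent recognizer follows each rule in turn, including the 0..255 octet range. It is a sketch, not the Motr implementation of m0_net_endpoint_is_valid(); demo_endpoint_is_valid() and its helpers are hypothetical. It matches literals case-sensitively, although ABNF quoted strings (RFC 5234) are formally case-insensitive.

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <string.h>

/* Consume the literal `lit`; leave *s untouched on mismatch. */
static bool demo_lit(const char **s, const char *lit)
{
	size_t n = strlen(lit);

	if (strncmp(*s, lit, n) != 0)
		return false;
	*s += n;
	return true;
}

/* 1*DIGIT */
static bool demo_number(const char **s)
{
	const char *p = *s;

	while (isdigit((unsigned char)*p))
		p++;
	if (p == *s)
		return false;
	*s = p;
	return true;
}

/* 1*3DIGIT with a value in 0..255 */
static bool demo_octet(const char **s)
{
	int n = 0;
	int v = 0;

	while (n < 3 && isdigit((unsigned char)(*s)[n])) {
		v = v * 10 + ((*s)[n] - '0');
		n++;
	}
	*s += n;
	return n > 0 && v <= 255;
}

/* nid = "0@lo" / (ipv4addr "@" ("tcp" / "o2ib") [DIGIT]) */
static bool demo_nid(const char **s)
{
	if (demo_lit(s, "0@lo"))
		return true;
	for (int i = 0; i < 4; i++) {
		if (i > 0 && !demo_lit(s, "."))
			return false;
		if (!demo_octet(s))
			return false;
	}
	if (!demo_lit(s, "@"))
		return false;
	if (!demo_lit(s, "tcp") && !demo_lit(s, "o2ib"))
		return false;
	if (isdigit((unsigned char)**s))
		(*s)++; /* optional single network number digit */
	return true;
}

/* endpoint = nid ":12345:" 1*DIGIT ":" 1*DIGIT */
static bool demo_endpoint_is_valid(const char *endpoint)
{
	const char *s = endpoint;

	return demo_nid(&s) && demo_lit(&s, ":12345:") && demo_number(&s) &&
	       demo_lit(&s, ":") && demo_number(&s) && *s == '\0';
}
```

For example, "192.168.1.10@tcp:12345:34:101" and "0@lo:12345:34:1" are accepted, while "10.0.0.256@tcp:12345:34:1" is rejected by the octet range check.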

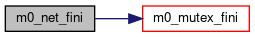

◆ m0_net_fini()

| M0_INTERNAL void m0_net_fini | ( | void | ) |

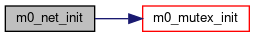

◆ m0_net_init()

| M0_INTERNAL int m0_net_init | ( | void | ) |

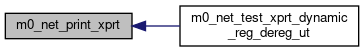

◆ m0_net_print_xprt()

| M0_INTERNAL void m0_net_print_xprt | ( | void | ) |

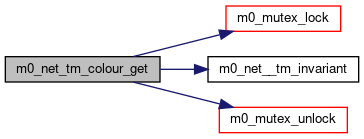

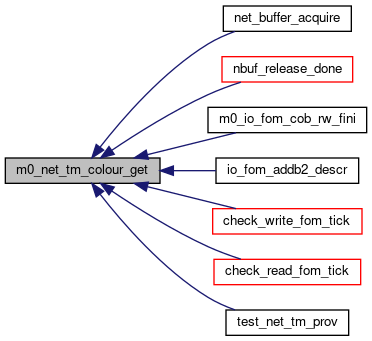

◆ m0_net_tm_colour_get()

| M0_INTERNAL uint32_t m0_net_tm_colour_get | ( | struct m0_net_transfer_mc * | tm | ) |

Returns the buffer pool color associated with a transfer machine.

- See also

- m0_net_tm_colour_set()

Definition at line 448 of file tm.c.

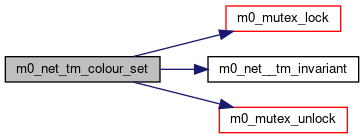

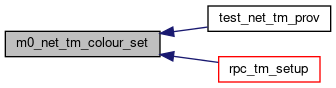

◆ m0_net_tm_colour_set()

| M0_INTERNAL void m0_net_tm_colour_set | ( | struct m0_net_transfer_mc * | tm, | uint32_t | colour | ) |

Associates a buffer pool color with a transfer machine. This helps establish an association between a network buffer and the transfer machine when provisioning from a buffer pool, which can considerably improve the spatial and temporal locality of future provisioning calls from the buffer pool.

Automatically provisioned receive queue network buffers will be allocated with the specified color. The application can also use this color when provisioning buffers for this transfer machine in other network buffer pool use cases.

If this function is not called, the transfer machine's color is initialized to ~0.

- Precondition

- M0_IN(tm->ntm_state, (M0_NET_TM_INITIALIZED, M0_NET_TM_STARTED))

Definition at line 436 of file tm.c.

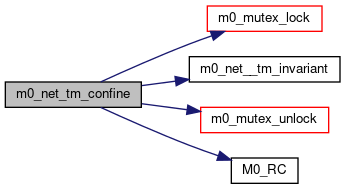

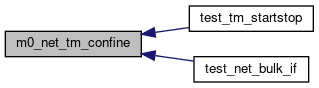

◆ m0_net_tm_confine()

| M0_INTERNAL int m0_net_tm_confine | ( | struct m0_net_transfer_mc * | tm, | const struct m0_bitmap * | processors | ) |

Sets the processor affinity of the threads of a transfer machine. The transfer machine must be initialized but not yet started.

Support for this operation is transport specific.

- Parameters

- processors: Processor bitmap. The bitmap is not referenced internally after the subroutine returns.

- Return values

- -ENOSYS: No affinity support is available in the transport.

- Precondition

- tm->ntm_state == M0_NET_TM_INITIALIZED

- See also

- Processor API

- Bitmap API

Definition at line 356 of file tm.c.

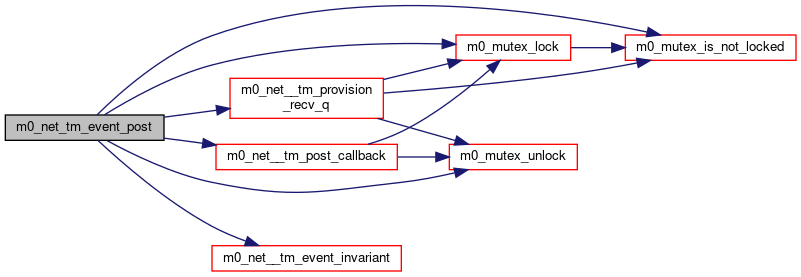

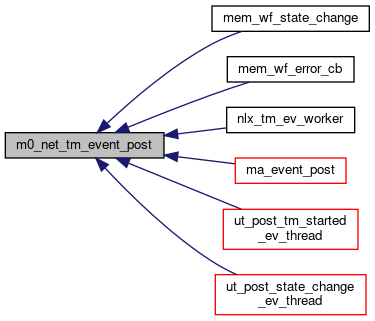

◆ m0_net_tm_event_post()

| M0_INTERNAL void m0_net_tm_event_post | ( | const struct m0_net_tm_event * | ev | ) |

A transfer machine is notified of non-buffer related events of interest with this subroutine. Typically, the subroutine is invoked by the transport associated with the transfer machine.

The event data structure is not referenced from elsewhere after this subroutine returns, so it may be allocated on the stack of the calling thread.

Multiple concurrent events may be delivered for a given transfer machine.

The subroutine will also signal to all waiters on the m0_net_transfer_mc::ntm_chan field after delivery of the callback.

The invoking process should be aware that the callback subroutine could end up making re-entrant calls to the transport layer.

- Parameters

- ev: Event pointer. The m0_net_tm_event::nte_tm field identifies the transfer machine.

- See also

- m0_net_buffer_event_post()

Definition at line 84 of file tm.c.

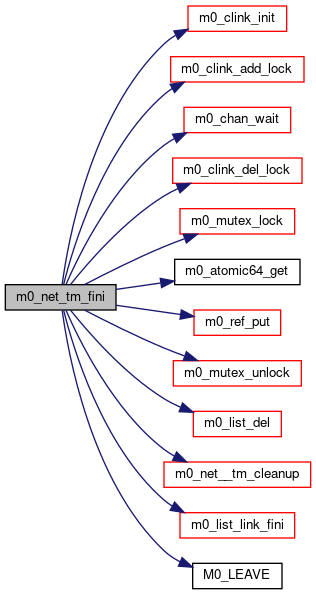

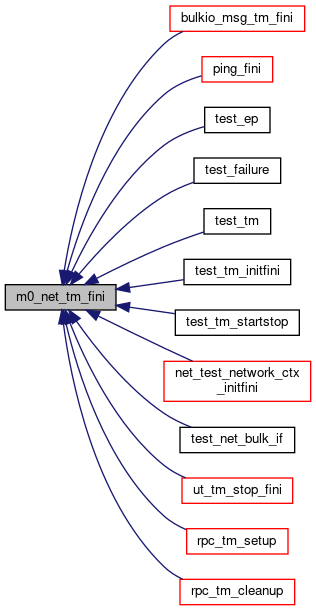

◆ m0_net_tm_fini()

| M0_INTERNAL void m0_net_tm_fini | ( | struct m0_net_transfer_mc * | tm | ) |

Finalizes a transfer machine, releasing any associated transport specific resources.

All application references to end points associated with this transfer machine should be released prior to this call.

- Precondition

- M0_IN(tm->ntm_state, (M0_NET_TM_STOPPED, M0_NET_TM_FAILED, M0_NET_TM_INITIALIZED)) && ((m0_nep_tlist_is_empty(&tm->ntm_end_points) && tm->ntm_ep == NULL) || (m0_nep_tlist_length(&tm->ntm_end_points) == 1 && m0_nep_tlist_contains(&tm->ntm_end_points, tm->ntm_ep) && m0_atomic64_get(tm->ntm_ep->nep_ref.ref_cnt) == 1))

Definition at line 204 of file tm.c.

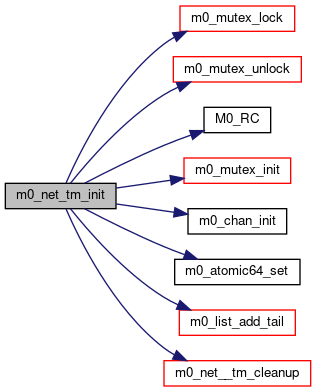

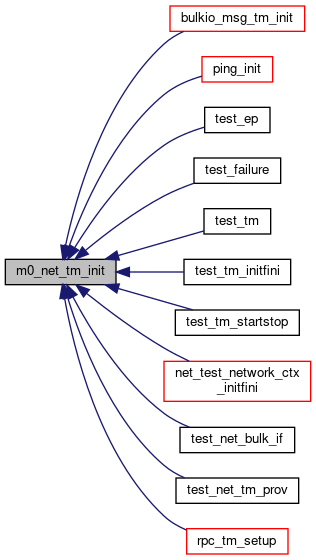

◆ m0_net_tm_init()

| M0_INTERNAL int m0_net_tm_init | ( | struct m0_net_transfer_mc * | tm, | struct m0_net_domain * | dom | ) |

Initializes a transfer machine.

Prior to invocation the following fields should be set:

- m0_net_transfer_mc.ntm_state should be set to M0_NET_TM_UNDEFINED. Zeroing the entire structure has the side effect of setting this value.

- m0_net_transfer_mc.ntm_callbacks should point to a properly initialized struct m0_net_tm_callbacks data structure.

All fields in the structure other than the above will be set to their appropriate initial values.

- Parameters

- dom: Network domain pointer.

- Postcondition

- tm->ntm_bev_auto_deliver is set.

- (tm->ntm_pool_colour == M0_NET_BUFFER_POOL_ANY_COLOR && tm->ntm_recv_pool_queue_min_length == M0_NET_TM_RECV_QUEUE_DEF_LEN)

Definition at line 160 of file tm.c.

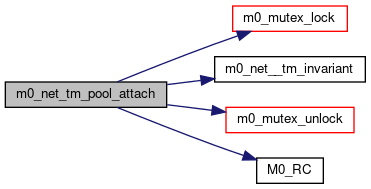

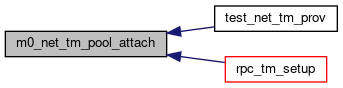

◆ m0_net_tm_pool_attach()

| M0_INTERNAL int m0_net_tm_pool_attach | ( | struct m0_net_transfer_mc * | tm, | struct m0_net_buffer_pool * | bufpool, | const struct m0_net_buffer_callbacks * | callbacks, | m0_bcount_t | min_recv_size, | uint32_t | max_recv_msgs, | uint32_t | min_recv_queue_len | ) |

Enables the automatic provisioning of network buffers to the receive queue of the transfer machine from the specified network buffer pool.

Provisioning takes place at the following times:

- Upon transfer machine startup

- Prior to delivery of a dequeued receive message buffer

- When buffers are returned to an exhausted network buffer pool and there are transfer machines that can be re-provisioned from that pool. This requires that the application invoke the m0_net_domain_buffer_pool_not_empty() subroutine from the pool's not-empty callback.

- When the minimum length of the receive buffer queue is modified.

- Parameters

- bufpool: Pointer to a network buffer pool.

- callbacks: Pointer to the callbacks to be set in the provisioned network buffer.

- min_recv_size: Minimum remaining size in a buffer in the TM receive queue to allow reuse for multiple messages.

- max_recv_msgs: Maximum number of messages that may be received in a buffer in the TM receive queue.

- min_recv_queue_len: Minimum number of buffers in the TM receive queue.

- Precondition

- tm != NULL

- tm->ntm_state == M0_NET_TM_INITIALIZED

- bufpool != NULL

- callbacks != NULL

- callbacks->nbc_cb[M0_NET_QT_MSG_RECV] != NULL

- min_recv_size > 0

- max_recv_msgs > 0

Definition at line 459 of file tm.c.

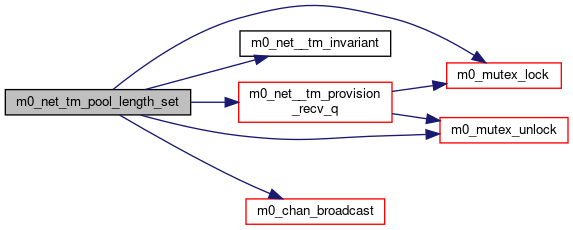

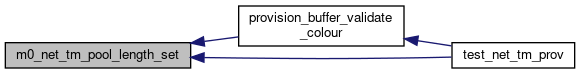

◆ m0_net_tm_pool_length_set()

M0_INTERNAL void m0_net_tm_pool_length_set(struct m0_net_transfer_mc *tm, uint32_t len)

Sets the minimum number of network buffers that should be present on the receive queue of the transfer machine. If the number falls below this value and automatic provisioning is enabled, then additional buffers are provisioned as needed. Invoking this subroutine may trigger provisioning.

- Parameters

- len: Minimum receive queue length. The default value is M0_NET_TM_RECV_QUEUE_DEF_LEN.

- See also

- m0_net_tm_pool_attach()

Definition at line 490 of file tm.c.
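The provisioning rule described above can be sketched as a loop that moves buffers from the pool to the receive queue until the minimum length is reached or the pool runs dry. This is a single-threaded model under stated assumptions: the pool is reduced to a free-buffer counter, a NULL pool stands for "automatic provisioning not enabled", and all names are hypothetical. The real transfer machine also deals with locking, pool colours and buffer callbacks, which are omitted here.

```c
#include <stddef.h>
#include <stdint.h>

struct pool_model { uint32_t free; };    /* hypothetical pool: free count only */

struct tm_model {
	struct pool_model *pool;             /* NULL: no automatic provisioning */
	uint32_t           recv_queue_len;   /* buffers currently on the queue */
	uint32_t           recv_queue_min_length;
};

/* Moves buffers from the pool to the receive queue until the minimum is
 * reached or the pool is exhausted. Returns the number of buffers added. */
static uint32_t tm_model_provision(struct tm_model *tm)
{
	uint32_t added = 0;

	if (tm->pool == NULL)
		return 0;
	while (tm->recv_queue_len < tm->recv_queue_min_length &&
	       tm->pool->free > 0) {
		tm->pool->free--;
		tm->recv_queue_len++;
		added++;
	}
	return added;
}

/* In the spirit of m0_net_tm_pool_length_set(): store the new minimum,
 * then provision, since changing the minimum may trigger provisioning. */
static void tm_model_pool_length_set(struct tm_model *tm, uint32_t len)
{
	tm->recv_queue_min_length = len;
	(void)tm_model_provision(tm);
}
```

For example, with 3 free buffers and a minimum of 2, provisioning adds 2 buffers; raising the minimum to 5 then adds the last remaining buffer and stops because the pool is exhausted.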

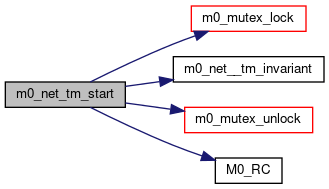

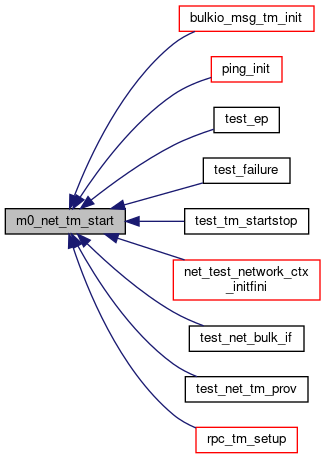

◆ m0_net_tm_start()

M0_INTERNAL int m0_net_tm_start(struct m0_net_transfer_mc *tm, const char *addr)

Starts a transfer machine.

The subroutine does not block the invoker. Instead the state is immediately changed to M0_NET_TM_STARTING, and an event will be posted to indicate when the transfer machine has transitioned to the M0_NET_TM_STARTED state.

- Note

- The state change event may be posted before this subroutine returns. It is guaranteed, however, that the event is posted on a different thread.

- Parameters

- tm: Transfer machine pointer.

- addr: End point address to associate with the transfer machine. May be NULL if the transport supports dynamic addressing. The end point is created internally and made visible through the ntm_ep field only if the start operation succeeds.

- Precondition

- tm->ntm_state == M0_NET_TM_INITIALIZED

- See also

- m0_net_end_point_create()

Definition at line 261 of file tm.c.
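The non-blocking start contract can be illustrated with POSIX threads. In this sketch, which is not Motr code, tm_model_start() switches to TM_STARTING and returns, while a worker thread completes the transition to TM_STARTED and invokes a state-change callback. Because the worker may run before tm_model_start() returns, a caller should rely on the event rather than on the state it observes right after the call. The names, the callback signature and the failure handling are assumptions of the sketch.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

enum tm_state { TM_UNDEFINED = 0, TM_INITIALIZED, TM_STARTING, TM_STARTED };

struct tm_model;
/* Hypothetical state-change callback, standing in for the TM event callback. */
typedef void (*tm_event_cb_t)(struct tm_model *tm, enum tm_state next);

struct tm_model {
	pthread_mutex_t lock;
	enum tm_state   state;
	tm_event_cb_t   event_cb;
	pthread_t       worker;
};

/* Runs on its own thread: finishes startup, then posts the event. */
static void *tm_model_start_worker(void *arg)
{
	struct tm_model *tm = arg;

	pthread_mutex_lock(&tm->lock);
	tm->state = TM_STARTED;
	pthread_mutex_unlock(&tm->lock);
	tm->event_cb(tm, TM_STARTED);       /* never on the invoker's thread */
	return NULL;
}

/* Non-blocking start: enter TM_STARTING and hand completion to a worker. */
static int tm_model_start(struct tm_model *tm)
{
	int rc;

	pthread_mutex_lock(&tm->lock);
	assert(tm->state == TM_INITIALIZED); /* documented precondition */
	tm->state = TM_STARTING;
	pthread_mutex_unlock(&tm->lock);
	rc = pthread_create(&tm->worker, NULL, tm_model_start_worker, tm);
	if (rc != 0) {                       /* sketch-only recovery on failure */
		pthread_mutex_lock(&tm->lock);
		tm->state = TM_INITIALIZED;
		pthread_mutex_unlock(&tm->lock);
	}
	return rc;
}

/* Example callback: remembers which thread delivered which state. */
static pthread_t     last_event_thread;
static enum tm_state last_event_state;

static void record_event(struct tm_model *tm, enum tm_state next)
{
	(void)tm;
	last_event_thread = pthread_self();
	last_event_state  = next;
}
```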

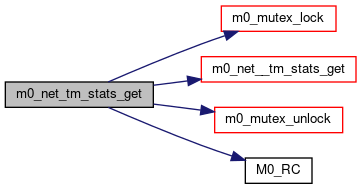

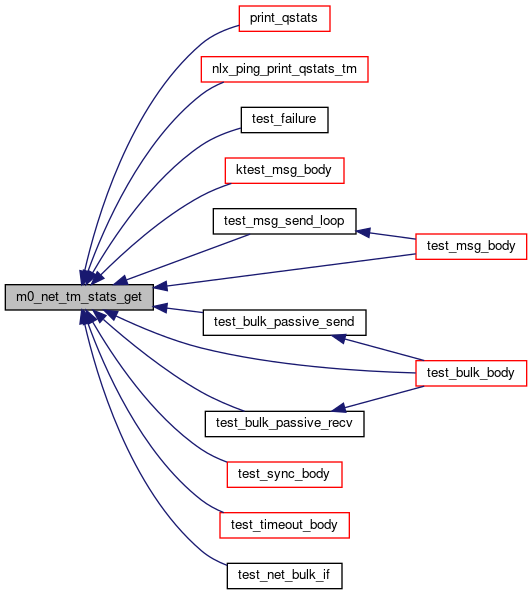

◆ m0_net_tm_stats_get()

M0_INTERNAL int m0_net_tm_stats_get(struct m0_net_transfer_mc *tm, enum m0_net_queue_type qtype, struct m0_net_qstats *qs, bool reset)

Retrieves transfer machine statistics for all or for a single logical queue, optionally resetting the data. The operation is performed atomically with respect to on-going transfer machine activity.

- Parameters

- qtype: Logical queue identifier of the queue concerned. Specify M0_NET_QT_NR instead if all the queues are to be considered.

- qs: Returned statistical data. May be NULL if only a reset operation is desired. Otherwise it should point to a single m0_net_qstats structure if qtype is not M0_NET_QT_NR, or to an array of M0_NET_QT_NR such structures in which to return the statistics for all the queues.

- reset: True iff the associated statistics should be reset at the same time.

- Precondition

- tm->ntm_state >= M0_NET_TM_INITIALIZED

Definition at line 343 of file tm.c.
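The qtype, qs and reset combinations above can be modeled as follows. This is a hypothetical sketch, not the Motr implementation: struct qstats carries made-up counters rather than the real m0_net_qstats fields, and a pthread mutex stands in for whatever the transfer machine uses to make the copy-and-reset atomic with respect to ongoing activity.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical mirror of enum m0_net_queue_type. */
enum qtype {
	QT_MSG_RECV = 0, QT_MSG_SEND, QT_PASSIVE_BULK_RECV,
	QT_PASSIVE_BULK_SEND, QT_ACTIVE_BULK_RECV, QT_ACTIVE_BULK_SEND, QT_NR
};

/* Made-up counters; the real m0_net_qstats fields may differ. */
struct qstats { uint64_t num_adds; uint64_t num_dels; uint64_t total_bytes; };

struct tm_model {
	pthread_mutex_t lock;
	struct qstats   stats[QT_NR];
};

/* qtype == QT_NR selects every queue; qs may be NULL for a reset-only call. */
static int tm_model_stats_get(struct tm_model *tm, enum qtype qtype,
                              struct qstats *qs, bool reset)
{
	size_t first = (qtype == QT_NR) ? 0 : (size_t)qtype;
	size_t count = (qtype == QT_NR) ? QT_NR : 1;

	assert((unsigned)qtype <= QT_NR);
	pthread_mutex_lock(&tm->lock);       /* copy and reset as one step */
	if (qs != NULL)
		memcpy(qs, &tm->stats[first], count * sizeof *qs);
	if (reset)
		memset(&tm->stats[first], 0, count * sizeof tm->stats[0]);
	pthread_mutex_unlock(&tm->lock);
	return 0;
}
```

Holding the lock across both the copy and the reset ensures that no update can slip in between reading a counter and zeroing it.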

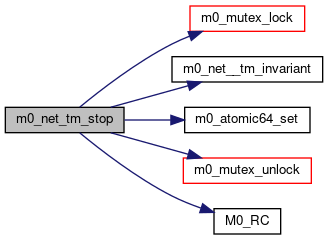

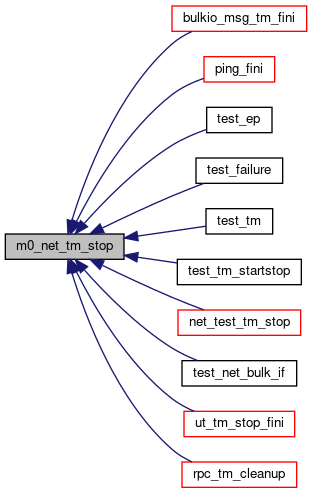

◆ m0_net_tm_stop()

M0_INTERNAL int m0_net_tm_stop(struct m0_net_transfer_mc *tm, bool abort)

Initiates the shutdown of a transfer machine. New messages will not be accepted and new end points cannot be created. Pending operations will be completed or aborted, depending on the abort parameter.

All end point references must be released by the application prior to invocation. The only end point reference that may exist is that of this transfer machine itself, and that will be released during fini.

The subroutine does not block the invoker. Instead the state is immediately changed to M0_NET_TM_STOPPING, and an event will be posted to indicate when the transfer machine has transitioned to the M0_NET_TM_STOPPED state.

- Note

- The state change event may be posted before this subroutine returns. It is guaranteed, however, that the event is posted on a different thread.

- Precondition

- M0_IN(tm->ntm_state, (M0_NET_TM_INITIALIZED, M0_NET_TM_STARTING, M0_NET_TM_STARTED))

- Parameters

- abort: Cancel pending operations. Support for this option is transport-specific.

Definition at line 293 of file tm.c.
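The stop contract (allowed start states, end point references released beforehand, asynchronous transition to the stopped state, optional abort) can be sketched with a worker thread. Everything here is hypothetical: tm_model_stoppable() spells out the M0_IN() precondition as plain comparisons, app_ep_refs is an assumed counter of application-held end point references, and pending operations are reduced to a count that is either completed or cancelled.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <string.h>

enum tm_state { TM_UNDEFINED = 0, TM_INITIALIZED, TM_STARTING, TM_STARTED,
                TM_STOPPING, TM_STOPPED, TM_FAILED };

struct tm_model {
	pthread_mutex_t lock;
	enum tm_state   state;
	unsigned        app_ep_refs;  /* end point refs still held by the app */
	unsigned        pending;      /* operations queued when stop is called */
	unsigned        completed;
	unsigned        cancelled;
	bool            abort_requested;
	pthread_t       worker;
};

/* Same test as M0_IN(state, (INITIALIZED, STARTING, STARTED)). */
static bool tm_model_stoppable(enum tm_state s)
{
	return s == TM_INITIALIZED || s == TM_STARTING || s == TM_STARTED;
}

/* Runs on its own thread: drains pending work, then reaches TM_STOPPED. */
static void *tm_model_stop_worker(void *arg)
{
	struct tm_model *tm = arg;

	pthread_mutex_lock(&tm->lock);
	if (tm->abort_requested)
		tm->cancelled += tm->pending;  /* abort: cancel pending operations */
	else
		tm->completed += tm->pending;  /* otherwise: let them complete */
	tm->pending = 0;
	tm->state   = TM_STOPPED;
	pthread_mutex_unlock(&tm->lock);
	return NULL;
}

/* Non-blocking stop: enter TM_STOPPING and hand completion to a worker. */
static int tm_model_stop(struct tm_model *tm, bool abort)
{
	pthread_mutex_lock(&tm->lock);
	assert(tm_model_stoppable(tm->state));
	assert(tm->app_ep_refs == 0);      /* app released its end points */
	tm->state           = TM_STOPPING;
	tm->abort_requested = abort;
	pthread_mutex_unlock(&tm->lock);
	return pthread_create(&tm->worker, NULL, tm_model_stop_worker, tm);
}
```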

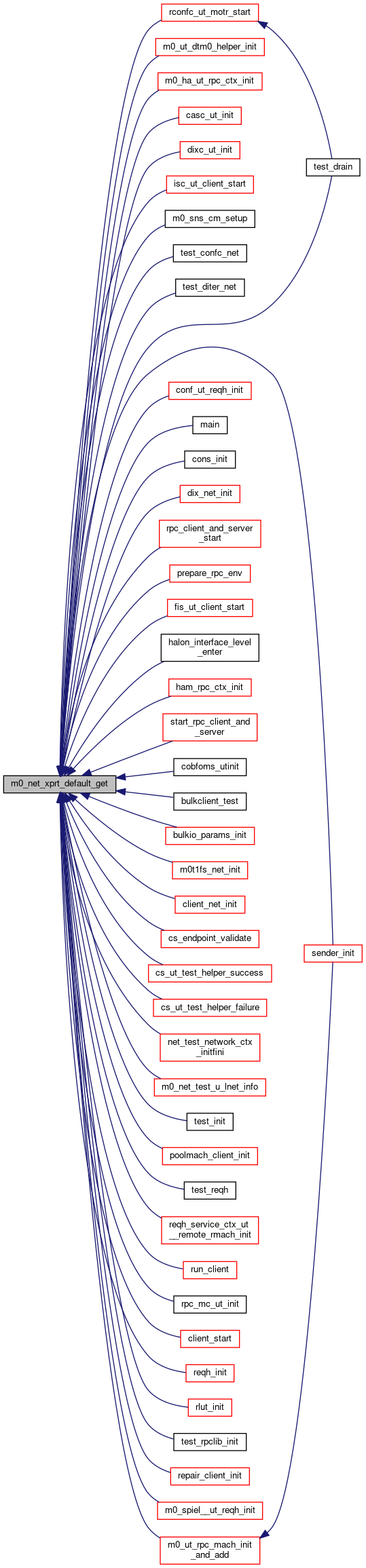

◆ m0_net_xprt_default_get()

struct m0_net_xprt *m0_net_xprt_default_get(void)

◆ m0_net_xprt_default_set()

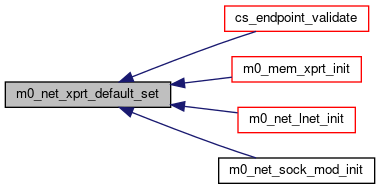

M0_INTERNAL void m0_net_xprt_default_set(const struct m0_net_xprt *xprt)

◆ m0_net_xprt_deregister()

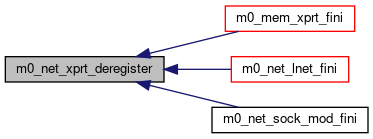

M0_INTERNAL void m0_net_xprt_deregister(const struct m0_net_xprt *xprt)

◆ m0_net_xprt_nr()

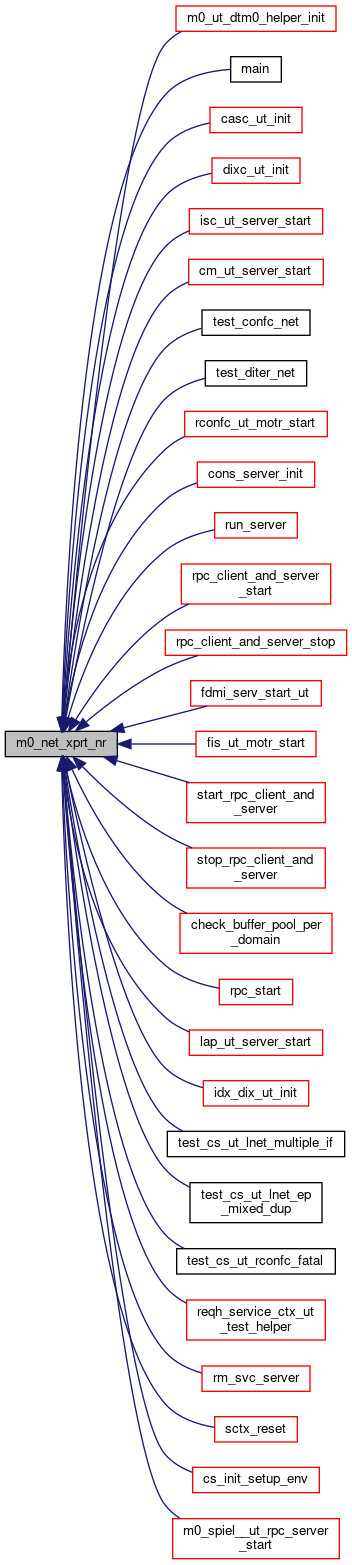

int m0_net_xprt_nr(void)

◆ m0_net_xprt_register()

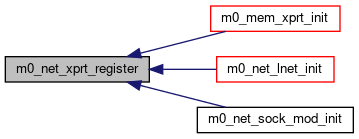

M0_INTERNAL void m0_net_xprt_register(const struct m0_net_xprt *xprt)

◆ M0_TL_DECLARE() [1/3]

M0_TL_DECLARE(m0_nep, M0_INTERNAL, struct m0_net_end_point)

◆ M0_TL_DECLARE() [2/3]

M0_TL_DECLARE(m0_net_pool, M0_INTERNAL, struct m0_net_buffer)

◆ M0_TL_DECLARE() [3/3]

M0_TL_DECLARE(m0_net_tm, M0_INTERNAL, struct m0_net_buffer)

◆ M0_TL_DEFINE() [1/2]

M0_TL_DEFINE(m0_nep, M0_INTERNAL, struct m0_net_end_point)

◆ M0_TL_DEFINE() [2/2]

M0_TL_DEFINE(m0_net_tm, M0_INTERNAL, struct m0_net_buffer)

◆ M0_TL_DESCR_DECLARE() [1/3]

M0_TL_DESCR_DECLARE(m0_nep, M0_EXTERN)

Endpoint lists. Used for the transfer machine ntm_end_points list.

◆ M0_TL_DESCR_DECLARE() [2/3]

M0_TL_DESCR_DECLARE(m0_net_pool, M0_EXTERN)

◆ M0_TL_DESCR_DECLARE() [3/3]

M0_TL_DESCR_DECLARE(m0_net_tm, M0_EXTERN)

◆ M0_TL_DESCR_DEFINE() [1/2]

M0_TL_DESCR_DEFINE(m0_nep, "net end points", M0_INTERNAL, struct m0_net_end_point, nep_tm_linkage, nep_magix, M0_NET_NEP_MAGIC, M0_NET_NEP_HEAD_MAGIC)

◆ M0_TL_DESCR_DEFINE() [2/2]

M0_TL_DESCR_DEFINE(m0_net_tm, "tm list", M0_INTERNAL, struct m0_net_buffer, nb_tm_linkage, nb_magic, M0_NET_BUFFER_LINK_MAGIC, M0_NET_BUFFER_HEAD_MAGIC)
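The descriptors above tie together a list name, the element type, the linkage field (nep_tm_linkage or nb_tm_linkage), the per-element magic field (nep_magix or nb_magic) and two magic constants. The following generic intrusive doubly linked list is written in the same spirit but is not Motr's tlist implementation; all names are hypothetical and the magic values are arbitrary. In this sketch the head magic validates the list itself, and the element magic is checked whenever a link is converted back to its containing structure, which catches corrupted or foreign links early.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Arbitrary illustrative magic values (Motr defines its own constants). */
#define EP_LINK_MAGIC UINT64_C(0x4c494e4b4d414749)
#define EP_HEAD_MAGIC UINT64_C(0x484541444d414749)

#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

struct link { struct link *next, *prev; };
struct list_head { struct link head; uint64_t magic; };

/* Element with embedded linkage and magic, like nep_tm_linkage/nep_magix. */
struct end_point {
	const char *addr;
	struct link tm_linkage;
	uint64_t    magix;
};

static void ep_list_init(struct list_head *l)
{
	l->head.next = l->head.prev = &l->head;
	l->magic = EP_HEAD_MAGIC;
}

static void ep_list_add_tail(struct list_head *l, struct end_point *ep)
{
	assert(l->magic == EP_HEAD_MAGIC);
	ep->magix = EP_LINK_MAGIC;
	ep->tm_linkage.prev = l->head.prev;
	ep->tm_linkage.next = &l->head;
	l->head.prev->next  = &ep->tm_linkage;
	l->head.prev        = &ep->tm_linkage;
}

/* Returns the first element, or NULL if the list is empty. */
static struct end_point *ep_list_first(struct list_head *l)
{
	struct end_point *ep;

	assert(l->magic == EP_HEAD_MAGIC);
	if (l->head.next == &l->head)
		return NULL;
	ep = container_of(l->head.next, struct end_point, tm_linkage);
	assert(ep->magix == EP_LINK_MAGIC);  /* reject foreign or corrupt links */
	return ep;
}

static size_t ep_list_length(const struct list_head *l)
{
	const struct link *p;
	size_t n = 0;

	for (p = l->head.next; p != &l->head; p = p->next)
		n++;
	return n;
}
```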

◆ M0_XCA_DOMAIN()

struct m0_net_buf_desc M0_XCA_DOMAIN(rpc)

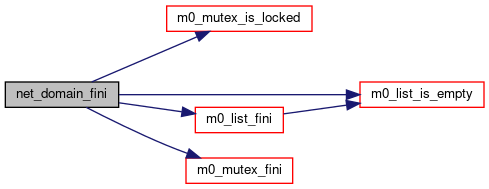

◆ net_domain_fini()

static

Variable Documentation

◆ m0_net_module_type

const struct m0_module_type m0_net_module_type

◆ m0_net_mutex

struct m0_mutex m0_net_mutex

Network module global mutex. This mutex is used to serialize domain init and fini. It is defined here so that it can be initialized and finalized by the general initialization mechanism. Transports that deal with multiple domains can rely on this mutex being held across their xo_dom_init() and xo_dom_fini() methods.
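The locking contract described above can be illustrated with a pthread mutex. In this hypothetical sketch, domain_init() and domain_fini() hold net_mutex around the transport's dom_init and dom_fini hooks (stand-ins for xo_dom_init() and xo_dom_fini()), so a transport can keep state shared across its domains, here a plain counter, without a lock of its own. None of these names belong to the Motr API.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>

/* Hypothetical analogue of m0_net_mutex. */
static pthread_mutex_t net_mutex = PTHREAD_MUTEX_INITIALIZER;

/* Transport-private state shared by all of its domains. The hooks below are
 * only ever called with net_mutex held, so they need no lock of their own. */
static unsigned xprt_domain_count;

struct xprt_ops {
	int  (*dom_init)(void *dom);     /* like xo_dom_init() */
	void (*dom_fini)(void *dom);     /* like xo_dom_fini() */
};

static int sample_dom_init(void *dom) { (void)dom; xprt_domain_count++; return 0; }

static void sample_dom_fini(void *dom)
{
	(void)dom;
	assert(xprt_domain_count > 0);
	xprt_domain_count--;
}

static const struct xprt_ops sample_xprt = { sample_dom_init, sample_dom_fini };

/* Generic layer: serializes every domain init/fini through net_mutex. */
static int domain_init(void *dom, const struct xprt_ops *ops)
{
	int rc;

	pthread_mutex_lock(&net_mutex);
	rc = ops->dom_init(dom);
	pthread_mutex_unlock(&net_mutex);
	return rc;
}

static void domain_fini(void *dom, const struct xprt_ops *ops)
{
	pthread_mutex_lock(&net_mutex);
	ops->dom_fini(dom);
	pthread_mutex_unlock(&net_mutex);
}

/* Usage helper: repeatedly creates and destroys a dummy domain. */
static void *churn(void *arg)
{
	int dom, i;

	(void)arg;
	for (i = 0; i < 1000; i++)
		if (domain_init(&dom, &sample_xprt) == 0)
			domain_fini(&dom, &sample_xprt);
	return NULL;
}
```

Running several churn() threads concurrently leaves the counter at zero, because every update to it happens under net_mutex.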