Atomic operations on 64-bit quantities.

Implementation of these is platform-specific.

Implementation of atomic operations for Linux user space uses x86_64 assembly language instructions (with gcc syntax). The "lock" prefix is used everywhere; no optimisation for non-SMP configurations is present.

Implementation of atomic operations for Linux user space that uses the gcc __sync_fetch* built-in functions:

http://gcc.gnu.org/onlinedocs/gcc-4.5.4/gcc/Atomic-Builtins.html
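As a minimal sketch of what a built-in based variant could look like, the counter operations map onto the gcc __sync family fairly directly. The type `struct sketch_atomic64`, its field `a_value`, and the `sketch_*` function names are hypothetical stand-ins introduced here for illustration; they are not taken from the actual header, whose layout and choice of built-ins may differ.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct m0_atomic64; the real layout may differ. */
struct sketch_atomic64 {
	int64_t a_value;
};

/* Atomically add num to the counter, discarding the result. */
static inline void sketch_atomic64_add(struct sketch_atomic64 *a, int64_t num)
{
	assert(a != NULL);
	(void)__sync_fetch_and_add(&a->a_value, num);
}

/* Atomically add d to the counter and return the new value. */
static inline int64_t sketch_atomic64_add_return(struct sketch_atomic64 *a,
						 int64_t d)
{
	assert(a != NULL);
	return __sync_add_and_fetch(&a->a_value, d);
}

/* Atomically subtract d from the counter and return the new value. */
static inline int64_t sketch_atomic64_sub_return(struct sketch_atomic64 *a,
						 int64_t d)
{
	assert(a != NULL);
	return __sync_sub_and_fetch(&a->a_value, d);
}
```

The `__sync_*` built-ins act as full barriers, which is one plausible reason for a small cost difference against hand-written assembly; the sketch does not try to reproduce the measurements.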

The built-in based functions were benchmarked against the assembly-based functions in lib/user_space/user_x86_64_atomic.h and found to be slightly slower than their assembly counterparts (average 81.38 versus 79.57 in the runs below, with a larger spread). They are therefore off by default; the configure option --enable-sync_atomic enables them.

With assembly:

    utils/ub 10

    set: atomic-ub
     bench: [   iter]    min    max    avg    std    sec/op   op/sec
    atomic: [   1000]  73.68  83.15  79.57  3.40% 7.957e-02/1.257e+01

With gcc built-ins:

    utils/ub 10

    set: atomic-ub
     bench: [   iter]    min    max    avg    std    sec/op   op/sec
    atomic: [   1000]  74.35  95.29  81.38  6.37% 8.138e-02/1.229e+01

Implementation of atomic operations for Linux user space uses aarch64 assembly language instructions (with gcc syntax). aarch64 uses its own set of atomic assembly instructions to ensure atomicity; no optimisation for non-SMP configurations is present.

Implementation of atomic operations for Linux user space uses x86_64 assembly language instructions (with gcc syntax). The "lock" prefix is used everywhere to ensure atomicity; no optimisation for non-SMP configurations is present.

◆ M0_ATOMIC64_CAS

    #define M0_ATOMIC64_CAS(loc, oldval, newval)

Value:

    ({                                                                            \
        M0_CASSERT(__builtin_types_compatible_p(typeof(*(loc)), typeof(oldval))); \
        M0_CASSERT(__builtin_types_compatible_p(typeof(oldval), typeof(newval))); \
        m0_atomic64_cas_ptr((void **)(loc), oldval, newval);                      \
    })

Definition at line 136 of file atomic.h.
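The macro above wraps the pointer compare-and-swap with two compile-time checks, so that `*loc`, `oldval` and `newval` must all have the same type. A rough, self-contained approximation is sketched below; `SKETCH_ATOMIC64_CAS` and `sketch_cas_ptr` are hypothetical names, `_Static_assert` stands in for `M0_CASSERT`, and the use of `__sync_bool_compare_and_swap` is an assumption about the underlying primitive rather than a description of the real one.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Stand-in for m0_atomic64_cas_ptr(), built on a gcc built-in (assumption). */
static inline bool sketch_cas_ptr(void **loc, void *oldval, void *newval)
{
	assert(loc != NULL);
	return __sync_bool_compare_and_swap(loc, oldval, newval);
}

/*
 * Stand-in for M0_ATOMIC64_CAS: mismatched pointer types are rejected at
 * compile time, before the untyped void ** primitive is called.
 * Relies on gcc statement expressions and __typeof__.
 */
#define SKETCH_ATOMIC64_CAS(loc, oldval, newval)                          \
({                                                                        \
	_Static_assert(__builtin_types_compatible_p(__typeof__(*(loc)),   \
						    __typeof__(oldval)),  \
		       "*loc and oldval must have the same type");        \
	_Static_assert(__builtin_types_compatible_p(__typeof__(oldval),   \
						    __typeof__(newval)),  \
		       "oldval and newval must have the same type");      \
	sketch_cas_ptr((void **)(loc), oldval, newval);                   \
})
```

With this sketch, passing an `int *` location together with a `char *` replacement fails to compile instead of silently going through the `void **` cast.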

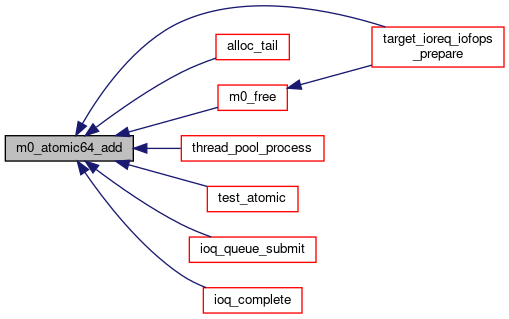

◆ m0_atomic64_add()

    static inline void m0_atomic64_add(struct m0_atomic64 *a, int64_t num)

Atomically adds given amount to a counter.

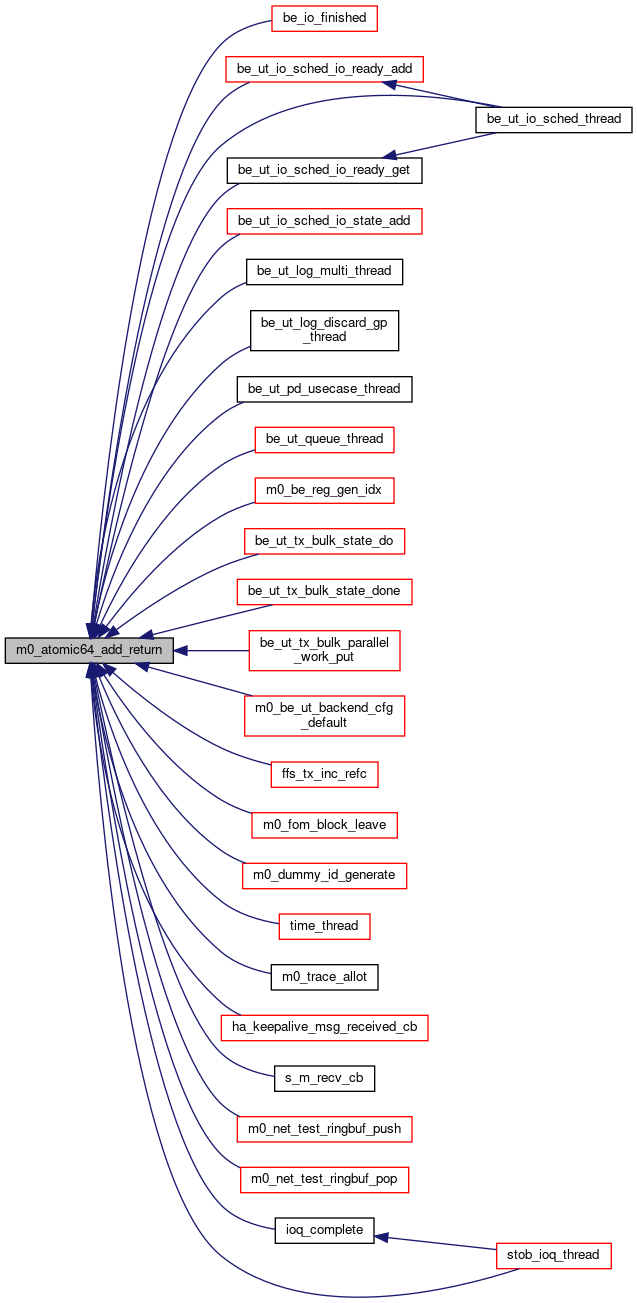

◆ m0_atomic64_add_return()

    static inline int64_t m0_atomic64_add_return(struct m0_atomic64 *a, int64_t d)

Atomically adds d to a counter and returns the result.

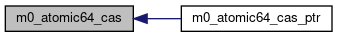

◆ m0_atomic64_cas()

    static inline bool m0_atomic64_cas(int64_t *loc, int64_t oldval, int64_t newval)

Atomic compare-and-swap: compares the value stored in loc with oldval and, if equal, replaces it with newval, all atomic w.r.t. concurrent accesses to loc.

Returns true iff new value was installed.
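Compare-and-swap is usually called in a retry loop: read the current value, compute the desired one, and retry if another thread changed the location in between. The sketch below uses that pattern to raise a value to a maximum. `sketch_atomic64_cas` is a hypothetical stand-in with the same signature and return convention as described above, implemented here with a gcc built-in as an assumption; `sketch_atomic64_max` is an illustrative helper, not part of the documented interface.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for m0_atomic64_cas(): true iff newval was installed. */
static inline bool sketch_atomic64_cas(int64_t *loc, int64_t oldval,
				       int64_t newval)
{
	assert(loc != NULL);
	return __sync_bool_compare_and_swap(loc, oldval, newval);
}

/* Raise *loc to at least val; returns the value observed before the update. */
static inline int64_t sketch_atomic64_max(int64_t *loc, int64_t val)
{
	int64_t seen;

	do {
		seen = *(volatile int64_t *)loc; /* re-read on every retry */
		if (seen >= val)
			return seen;             /* already large enough */
	} while (!sketch_atomic64_cas(loc, seen, val));
	return seen;
}
```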

◆ m0_atomic64_cas_ptr()

    static inline bool m0_atomic64_cas_ptr(void **loc, void *oldval, void *newval)

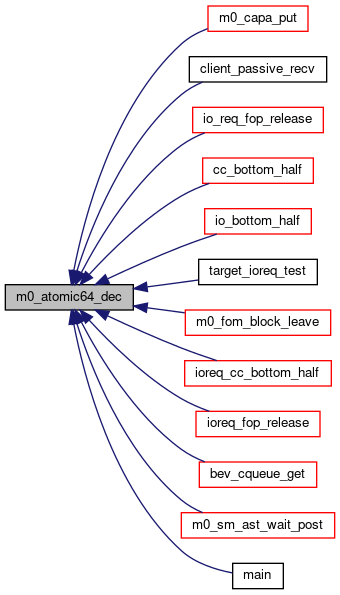

◆ m0_atomic64_dec()

Atomically decrements a counter.

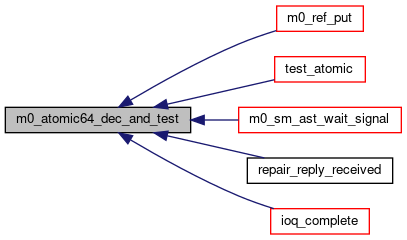

◆ m0_atomic64_dec_and_test()

    static inline bool m0_atomic64_dec_and_test(struct m0_atomic64 *a)

Atomically decrements a counter and returns true iff the result is 0.
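A typical use of decrement-and-test is reference counting, where exactly one caller sees the count reach zero and becomes responsible for cleanup. The following is a sketch under stated assumptions: `struct sketch_atomic64`, `struct sketch_obj` and all `sketch_*` functions are hypothetical, and the gcc built-ins stand in for whatever the platform-specific implementation actually uses.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct m0_atomic64. */
struct sketch_atomic64 {
	int64_t a_value;
};

static inline void sketch_atomic64_inc(struct sketch_atomic64 *a)
{
	(void)__sync_add_and_fetch(&a->a_value, 1);
}

/* True iff the counter is 0 after the decrement. */
static inline bool sketch_atomic64_dec_and_test(struct sketch_atomic64 *a)
{
	return __sync_sub_and_fetch(&a->a_value, 1) == 0;
}

/* Hypothetical reference-counted object. */
struct sketch_obj {
	struct sketch_atomic64 so_ref;
	bool                   so_released;
};

/* Take an additional reference. */
static inline void sketch_obj_get(struct sketch_obj *o)
{
	assert(o != NULL);
	sketch_atomic64_inc(&o->so_ref);
}

/* Drop a reference; returns true iff this call released the object. */
static inline bool sketch_obj_put(struct sketch_obj *o)
{
	assert(o != NULL);
	if (sketch_atomic64_dec_and_test(&o->so_ref)) {
		o->so_released = true; /* a real object would be freed here */
		return true;
	}
	return false;
}
```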

◆ m0_atomic64_get()

    static inline int64_t m0_atomic64_get(const struct m0_atomic64 *a)

Returns value of an atomic counter.

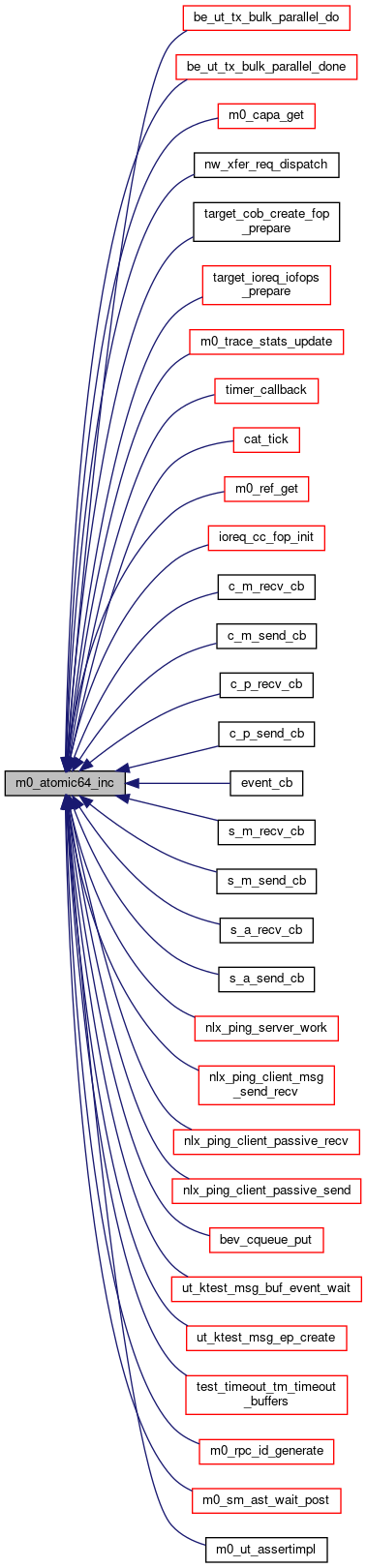

◆ m0_atomic64_inc()

Atomically increments a counter.

◆ m0_atomic64_inc_and_test()

    static inline bool m0_atomic64_inc_and_test(struct m0_atomic64 *a)

Atomically increments a counter and returns true iff the result is 0.
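Because the test is against zero, increment-and-test is convenient when a counter starts negative: arming it with -nr makes the nr-th increment the one that returns true. This is only one possible usage, sketched with hypothetical names (`struct sketch_atomic64`, `sketch_arrivals_init`, `sketch_atomic64_inc_and_test`) and a gcc built-in assumed as the underlying primitive.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for struct m0_atomic64. */
struct sketch_atomic64 {
	int64_t a_value;
};

/* True iff the counter is 0 after the increment. */
static inline bool sketch_atomic64_inc_and_test(struct sketch_atomic64 *a)
{
	assert(a != NULL);
	return __sync_add_and_fetch(&a->a_value, 1) == 0;
}

/* Arm the counter so that the nr-th arrival observes zero. */
static inline void sketch_arrivals_init(struct sketch_atomic64 *a, int64_t nr)
{
	assert(a != NULL && nr > 0);
	a->a_value = -nr;
}
```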

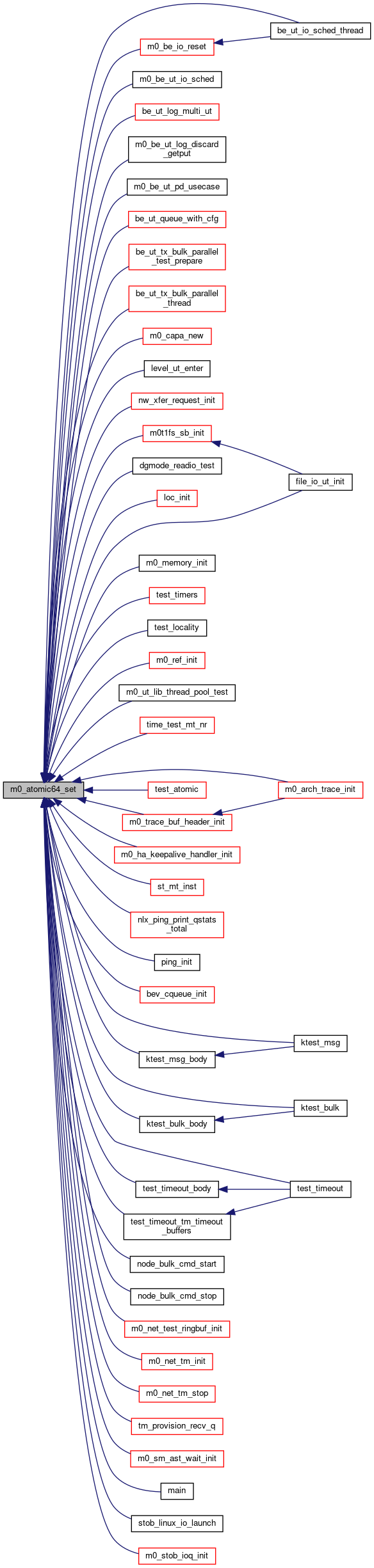

◆ m0_atomic64_set()

    static inline void m0_atomic64_set(struct m0_atomic64 *a, int64_t num)

Assigns a value to a counter.

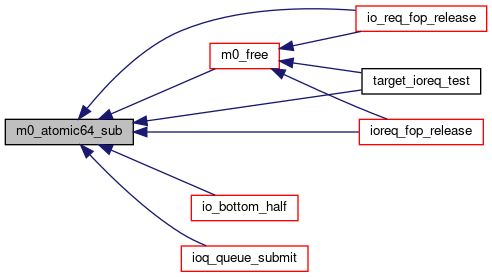

◆ m0_atomic64_sub()

    static inline void m0_atomic64_sub(struct m0_atomic64 *a, int64_t num)

Atomically subtracts given amount from a counter.

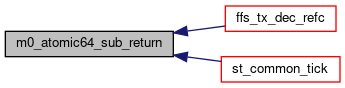

◆ m0_atomic64_sub_return()

    static inline int64_t m0_atomic64_sub_return(struct m0_atomic64 *a, int64_t d)

Atomically subtracts d from a counter and returns the result.

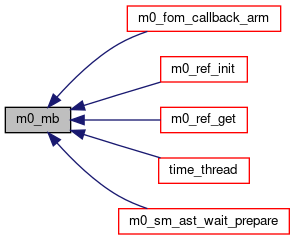

◆ m0_mb()

    static inline void m0_mb(void)

Hardware memory barrier. Forces strict CPU ordering.
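A full barrier is typically needed when one thread publishes data and then sets a flag that another thread polls: without ordering, the flag could become visible before the data. The sketch below illustrates that pairing. `sketch_mb` is a hypothetical stand-in that uses the gcc built-in `__sync_synchronize()`; whether the real function is implemented that way or with an explicit fence instruction is not stated in this document. `struct sketch_mailbox` and its helpers are likewise illustrative.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Stand-in for m0_mb(): a full hardware memory barrier (assumption). */
static inline void sketch_mb(void)
{
	__sync_synchronize();
}

/* Hypothetical single-slot mailbox shared by a producer and a consumer. */
struct sketch_mailbox {
	int64_t       sm_payload;
	volatile bool sm_ready;
};

/* Producer: make the payload visible before the flag. */
static inline void sketch_publish(struct sketch_mailbox *m, int64_t value)
{
	assert(m != NULL);
	m->sm_payload = value;
	sketch_mb();         /* order the payload store before the flag store */
	m->sm_ready = true;
}

/* Consumer: returns true and fills *out once the payload is published. */
static inline bool sketch_consume(struct sketch_mailbox *m, int64_t *out)
{
	assert(m != NULL && out != NULL);
	if (!m->sm_ready)
		return false;
	sketch_mb();         /* order the flag load before the payload load */
	*out = m->sm_payload;
	return true;
}
```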