- Overview

- Definitions

- Requirements

- Dependencies

- Design Highlights

- Functional Specification

- Logical Specification

- Conformance

- Unit Tests

- System Tests

- Analysis

- Current Limitations

- Known Issues

- References

Overview

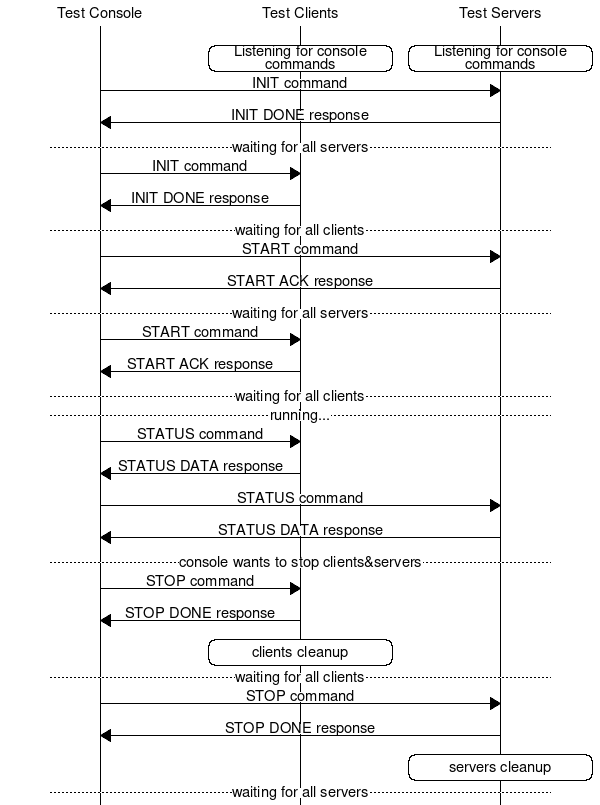

Motr network benchmark is designed to test the network subsystem of Motr and the network connections between nodes that are running Motr.

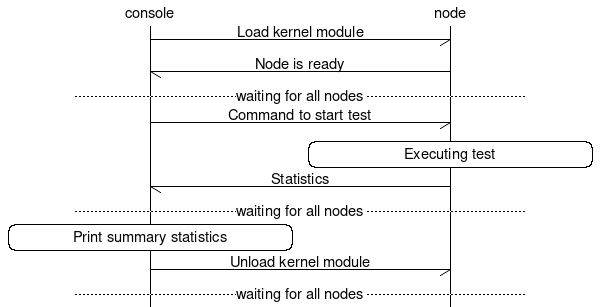

Motr Network Benchmark is implemented as a kernel module for the test node and a user-space program for the test console. Before testing, the kernel module must be copied to every test node; the test console then runs the test against those nodes.

Definitions

Previously defined terms:

- See also

- Motr Network Benchmark HLD

New terms:

- Configuration variable: a named variable that can hold a value.

- Configuration: a set of name-value pairs.
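
The two terms above can be pictured with a minimal C sketch. All names here (`cfg_var`, `cfg`, `cfg_get`) are hypothetical and chosen only for illustration; they are not the Motr API, and values are kept as strings purely to keep the example short.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical: a configuration variable is a name that can hold a value. */
struct cfg_var {
	const char *cv_name;
	const char *cv_value;
};

/* Hypothetical: a configuration is a set of name-value pairs. */
struct cfg {
	const struct cfg_var *c_vars;
	size_t                c_nr;
};

/* Returns the value stored under name, or NULL if no such variable exists. */
const char *cfg_get(const struct cfg *cfg, const char *name)
{
	size_t i;

	assert(cfg != NULL && name != NULL);
	for (i = 0; i < cfg->c_nr; ++i) {
		if (strcmp(cfg->c_vars[i].cv_name, name) == 0)
			return cfg->c_vars[i].cv_value;
	}
	return NULL;
}
```

A lookup of a name that is not in the set returns NULL, so a caller can tell "not configured" apart from an empty value.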

Requirements

- R.m0.net.self-test.statistics statistics from all nodes can be collected on the test console.

- R.m0.net.self-test.statistics.live statistics from all nodes can be collected on the test console at any time during the test.

- R.m0.net.self-test.statistics.live pdsh is used to collect statistics from all nodes at some interval.

- R.m0.net.self-test.test.ping latency is automatically measured for all messages.

- R.m0.net.self-test.test.bulk messages with additional data are used.

- R.m0.net.self-test.test.bulk.integrity.no-check the additional data in bulk messages isn't checked.

- R.m0.net.self-test.test.duration.simple the end user should be able to specify how long a test should run, by loop.

- R.m0.net.self-test.kernel the test client/server is implemented as a kernel module.

Dependencies

- R.m0.net

Design Highlights

- m0_net is used as network library.

- To keep the latency measurement error as small as possible, all heavy operations (such as buffer allocation) are done before test messages are exchanged between the test client and the test server.

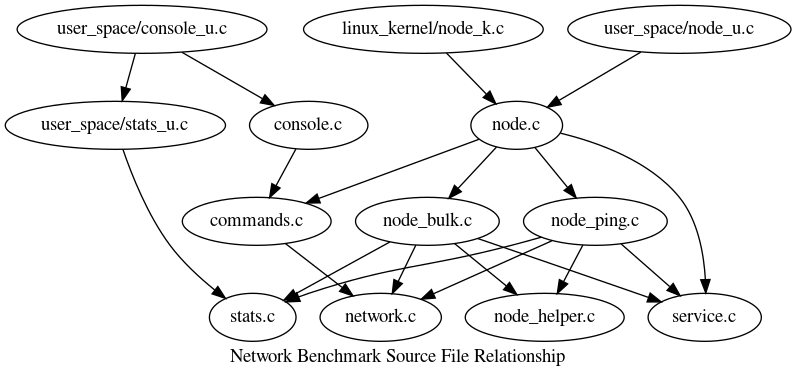

Logical Specification

Component Overview

Test node can be run in such a way:

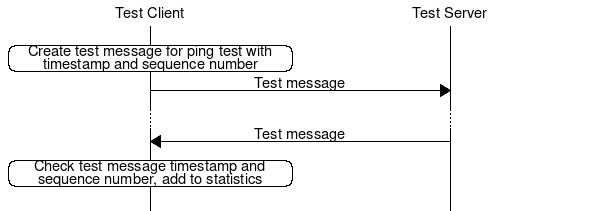

Ping Test

One test message travel:

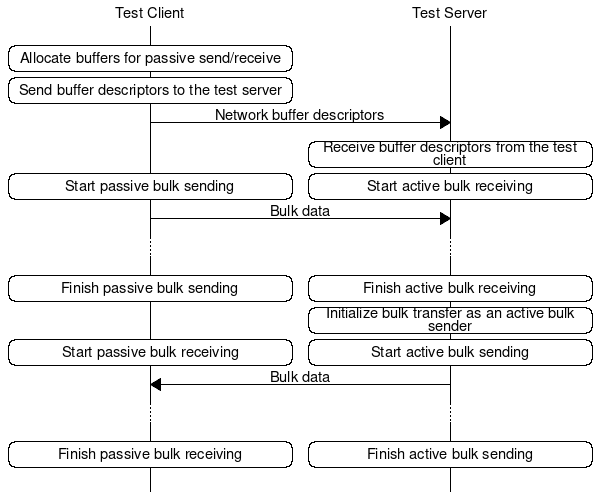

Bulk Test

RTT is measured as length of time interval [time of transition to transfer start state, time of transition to transfer finish state]. See Bulk Test Client Algorithm, Bulk Test Server Algorithm.

One test message travel:

Ping Test Client Algorithm

- Todo:

- Outdated and not used now.

Callbacks for ping test

- M0_NET_QT_MSG_SEND

- update stats

- M0_NET_QT_MSG_RECV

- update stats

- buf_free.up()

State Machine Legend

- Red solid arrow - state transition to "error" states.

- Green bold arrow - state transition for "successful" operation.

- Black dashed arrow - auto state transition (shouldn't be explicit).

- State name in double oval - final state.

Bulk Test Client Algorithm

- See also

- State Machine Legend

Bulk buffer pair states

- UNUSED - the buffer pair is not currently used in buffer operations. This is the initial state of a buffer pair.

- QUEUED - the buffer pair is queued or is about to be added to the network bulk queue. See the RECEIVING state of the bulk test server for why the state is entered early.

- BD_SENT - buffer descriptors for the bulk buffer pair are sent or are about to be sent to the test server. See the RECEIVING state of the bulk test server.

- CB_LEFT2 (CB_LEFT1) - there are 2 (or 1) network buffer callback(s) left for this buffer pair (including the network buffer callback for the message with buffer descriptors).

- TRANSFERRED - all network buffer callbacks for this buffer pair and for the message with buffer descriptors were executed successfully.

- FAILED2 (FAILED1, FAILED) - some operation failed, and 2 (1, 0) callbacks are left for this buffer pair (as in CB_LEFT2, CB_LEFT1).

Initial state: UNUSED.

Final states: TRANSFERRED, FAILED.

Transfer start state: QUEUED.

Transfer finish state: TRANSFERRED.
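
The client-side states listed above can be written down as a C enumeration. This is a minimal sketch with hypothetical identifiers (the `PAIR_` prefix and both helper functions are assumptions, not the names used in the Motr sources); the callback counts follow the state descriptions, and -1 is returned for states where the text does not state a count.

```c
#include <assert.h>
#include <stdbool.h>

/* Client bulk buffer pair states, named after the list in this section. */
enum pair_state {
	PAIR_UNUSED,
	PAIR_QUEUED,
	PAIR_BD_SENT,
	PAIR_CB_LEFT2,
	PAIR_CB_LEFT1,
	PAIR_TRANSFERRED,
	PAIR_FAILED2,
	PAIR_FAILED1,
	PAIR_FAILED,
	PAIR_NR
};

/* Final states are TRANSFERRED and FAILED. */
bool pair_state_is_final(enum pair_state s)
{
	assert(s < PAIR_NR);
	return s == PAIR_TRANSFERRED || s == PAIR_FAILED;
}

/*
 * Number of network buffer callbacks left for the pair, as encoded in the
 * state name. Returns -1 where the description gives no count.
 */
int pair_callbacks_left(enum pair_state s)
{
	assert(s < PAIR_NR);
	switch (s) {
	case PAIR_CB_LEFT2:
	case PAIR_FAILED2:
		return 2;
	case PAIR_CB_LEFT1:
	case PAIR_FAILED1:
		return 1;
	case PAIR_TRANSFERRED:
	case PAIR_FAILED:
		return 0;
	default:
		return -1;
	}
}
```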

Bulk buffer pair state transitions

- UNUSED -> QUEUED
  - client_process_unused_bulk()
    - add one bulk buffer to the passive bulk send queue, then add the other bulk buffer of the pair to the passive recv queue

- QUEUED -> BD_SENT
  - client_process_queued_bulk()
    - send msg buffer with bulk buffer descriptors

- QUEUED -> FAILED
  - client_process_unused_bulk()
    - addition to passive send queue failed

- QUEUED -> FAILED1
  - client_process_unused_bulk()
    - addition to passive recv queue failed
      - remove the already queued buffer from the passive send queue

- QUEUED -> FAILED2
  - client_process_queued_bulk()
    - encoding of bulk buffer network descriptors into the ping buffer failed
      - dequeue already queued bulk buffers
    - addition of the msg with bulk buffer descriptors to the network queue failed
      - dequeue already queued bulk buffers

- BD_SENT -> CB_LEFT2, CB_LEFT2 -> CB_LEFT1, CB_LEFT1 -> TRANSFERRED
  - network buffer callback
    - ev->nbe_status == 0

- BD_SENT -> FAILED2, CB_LEFT2 -> FAILED1, CB_LEFT1 -> FAILED
  - network buffer callback
    - ev->nbe_status != 0

- FAILED2 -> FAILED1, FAILED1 -> FAILED
  - network buffer callback

- TRANSFERRED -> UNUSED
  - node_bulk_state_transition_auto_all()
    - stats: increase total number of test messages

- FAILED -> UNUSED
  - node_bulk_state_transition_auto_all()
    - stats: increase total number of test messages and number of failed messages
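
The callback-driven part of the client transitions can be sketched as a single function that maps the current state and the callback status to the next state. The identifiers below are hypothetical, and `nbe_status` stands in for `ev->nbe_status`; transitions made by client_process_unused_bulk(), client_process_queued_bulk() and node_bulk_state_transition_auto_all() are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>

/* Client bulk buffer pair states, named after the list in this section. */
enum pair_state {
	PAIR_UNUSED,
	PAIR_QUEUED,
	PAIR_BD_SENT,
	PAIR_CB_LEFT2,
	PAIR_CB_LEFT1,
	PAIR_TRANSFERRED,
	PAIR_FAILED2,
	PAIR_FAILED1,
	PAIR_FAILED,
	PAIR_NR
};

/*
 * Next state after one network buffer callback. A non-zero status moves the
 * pair onto the FAILED* chain, which still counts down the callbacks left.
 * States with no callback transition listed are returned unchanged.
 */
enum pair_state pair_state_on_callback(enum pair_state s, int nbe_status)
{
	bool ok = nbe_status == 0;

	assert(s < PAIR_NR);
	switch (s) {
	case PAIR_BD_SENT:
		return ok ? PAIR_CB_LEFT2 : PAIR_FAILED2;
	case PAIR_CB_LEFT2:
		return ok ? PAIR_CB_LEFT1 : PAIR_FAILED1;
	case PAIR_CB_LEFT1:
		return ok ? PAIR_TRANSFERRED : PAIR_FAILED;
	case PAIR_FAILED2:
		return PAIR_FAILED1;
	case PAIR_FAILED1:
		return PAIR_FAILED;
	default:
		return s;
	}
}
```

Three successful callbacks take a pair from BD_SENT to TRANSFERRED; a failure at any step ends in FAILED after the same total number of callbacks.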

Ping Test Server Algorithm

- Todo:

- Outdated and not used now.

Test server allocates all necessary buffers and initializes transfer machine. Then it just works in transfer machine callbacks.

Ping test callbacks

- M0_NET_QT_MSG_RECV

- add buffer to msg send queue

- update stats

- M0_NET_QT_MSG_SEND

- add buffer to msg recv queue

Bulk Test Server Algorithm

- Every bulk buffer has its own state.

- The bulk test server maintains a queue of unused bulk buffers. When a buffer descriptor arrives, the bulk buffer for the message transfer is taken from this queue. If the queue is empty, the buffer descriptor is discarded and the numbers of failed and total test messages are increased.

- See also

- State Machine Legend

- UNUSED - the bulk buffer isn't currently used in network operations and can be used when passive bulk buffer descriptors arrive.

- BD_RECEIVED - message with buffer descriptors was received from the test client.

- RECEIVING - the bulk buffer is added to the active bulk receive queue. The bulk buffer enters this state just before it is added to the network queue, because the network buffer callback may be executed before the 'add to network buffer queue' function returns.

- SENDING - the bulk buffer is added to the active bulk send queue. As with the RECEIVING state, the bulk buffer enters this state just before it is added to the network queue.

- TRANSFERRED - bulk buffer was successfully received and sent.

- FAILED - some operation failed.

- BADMSG - message with buffer descriptors contains invalid data.

Initial state: UNUSED.

Final states: TRANSFERRED, FAILED, BADMSG.

Transfer start state: RECEIVING.

Transfer finish state: TRANSFERRED.
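
The handling of the unused bulk buffer queue on the test server can be sketched as follows. This is an illustration under assumptions: the fixed capacity, the ring layout, and all identifiers (`srv_unused_q`, `srv_stats`, `srv_bd_arrived`) are hypothetical and not taken from the Motr sources.

```c
#include <assert.h>
#include <stddef.h>

enum { SRV_BUF_NR = 2 };  /* hypothetical number of bulk buffers */

/* Hypothetical counters for total and failed test messages. */
struct srv_stats {
	unsigned long ss_total;
	unsigned long ss_failed;
};

/* FIFO of indices of bulk buffers that are in the UNUSED state. */
struct srv_unused_q {
	int    uq_idx[SRV_BUF_NR];
	size_t uq_head;
	size_t uq_len;
};

/*
 * Called when a buffer descriptor arrives. Returns the index of the bulk
 * buffer taken from the queue, or -1 if the queue is empty; in that case
 * the descriptor is discarded and both counters are increased.
 */
int srv_bd_arrived(struct srv_unused_q *q, struct srv_stats *st)
{
	int idx;

	assert(q != NULL && st != NULL);
	assert(q->uq_len <= SRV_BUF_NR && q->uq_head < SRV_BUF_NR);
	if (q->uq_len == 0) {
		++st->ss_total;
		++st->ss_failed;
		return -1;
	}
	idx = q->uq_idx[q->uq_head];
	q->uq_head = (q->uq_head + 1) % SRV_BUF_NR;
	--q->uq_len;
	return idx;
}
```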

Bulk buffer state transitions

- UNUSED -> BD_RECEIVED
  - M0_NET_QT_MSG_RECV callback
    - message with buffer descriptors was received from the test client

- BD_RECEIVED -> BADMSG
  - M0_NET_QT_MSG_RECV callback
    - message with buffer descriptors contains invalid data

- BD_RECEIVED -> RECEIVING
  - M0_NET_QT_MSG_RECV callback
    - bulk buffer was successfully added to active bulk receive queue.

- RECEIVING -> SENDING
  - M0_NET_QT_ACTIVE_BULK_RECV callback
    - bulk buffer was successfully received from the test client.

- RECEIVING -> FAILED
  - M0_NET_QT_MSG_RECV callback
    - addition to the active bulk receive queue failed.
  - M0_NET_QT_ACTIVE_BULK_RECV callback
    - bulk buffer receiving failed.

- SENDING -> TRANSFERRED
  - M0_NET_QT_ACTIVE_BULK_SEND callback
    - bulk buffer was successfully sent to the test client.

- SENDING -> FAILED
  - M0_NET_QT_ACTIVE_BULK_RECV callback
    - addition to the active bulk send queue failed.
  - M0_NET_QT_ACTIVE_BULK_SEND callback
    - active bulk sending failed.

- TRANSFERRED -> UNUSED
  - node_bulk_state_transition_auto_all()
    - stats: increase total number of test messages

- FAILED -> UNUSED
  - node_bulk_state_transition_auto_all()
    - stats: increase total number of test messages and number of failed messages

- BADMSG -> UNUSED
  - node_bulk_state_transition_auto_all()
    - stats: increase total number of test messages and number of bad messages
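
The automatic transitions from the final states back to UNUSED, together with their statistics updates, can be sketched as below. The identifiers are hypothetical; the real work is done by node_bulk_state_transition_auto_all(), whose signature is not shown in this document.

```c
#include <assert.h>
#include <stddef.h>

/* Server bulk buffer states, named after the list in this section. */
enum srv_state {
	SRV_UNUSED,
	SRV_BD_RECEIVED,
	SRV_RECEIVING,
	SRV_SENDING,
	SRV_TRANSFERRED,
	SRV_FAILED,
	SRV_BADMSG,
	SRV_NR
};

/* Hypothetical message counters updated by the automatic transitions. */
struct srv_counters {
	unsigned long sc_total;
	unsigned long sc_failed;
	unsigned long sc_bad;
};

/*
 * Applies the automatic transition for one buffer: every final state goes
 * back to UNUSED and updates the counters. Non-final states are unchanged.
 */
enum srv_state srv_state_transition_auto(enum srv_state s,
					 struct srv_counters *c)
{
	assert(s < SRV_NR && c != NULL);
	switch (s) {
	case SRV_TRANSFERRED:
		++c->sc_total;
		return SRV_UNUSED;
	case SRV_FAILED:
		++c->sc_total;
		++c->sc_failed;
		return SRV_UNUSED;
	case SRV_BADMSG:
		++c->sc_total;
		++c->sc_bad;
		return SRV_UNUSED;
	default:
		return s;
	}
}
```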

Test Console

Misc

- Typed variables are used to store configuration.

- Configuration variables are set in m0_net_test_config_init(). They should never be changed anywhere else.

- m0_net_test_stats keeps the data for a sample from which min/max/average/standard deviation can be calculated.

- m0_net_test_network_(msg/bulk)_(send/recv)_* is a wrapper around m0_net. These functions use m0_net_test_ctx as a container for buffers, callbacks, endpoints and the transfer machine. A buffer/endpoint index (an int in the range [0, NR), where NR is the number of corresponding elements) is used to select the buffer/endpoint structure from m0_net_test_ctx.

- All buffers are allocated in m0_net_test_network_ctx_init().

- Endpoints can be added after m0_net_test_network_ctx_init() using m0_net_test_network_ep_add().
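
The index-based selection described above can be illustrated with a small sketch. The types and the accessor below are hypothetical stand-ins, not m0_net_test_ctx or its functions; the only point shown is that an index is valid when it lies in [0, NR).

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a preallocated network buffer. */
struct test_buf {
	char tb_data[64];
};

/* Hypothetical container: buffers are allocated once, at init time. */
struct test_ctx {
	struct test_buf *tc_bufs;
	size_t           tc_buf_nr;
};

/* Selects a buffer by index; returns NULL if index is outside [0, NR). */
struct test_buf *test_ctx_buf(struct test_ctx *ctx, size_t index)
{
	assert(ctx != NULL);
	return index < ctx->tc_buf_nr ? &ctx->tc_bufs[index] : NULL;
}
```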

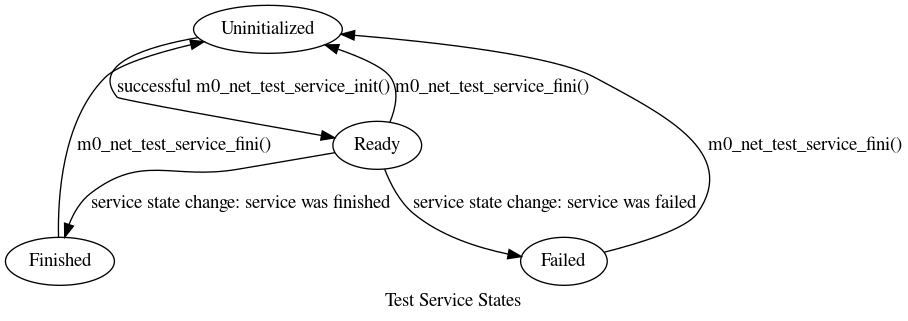

State Specification

Threading and Concurrency Model

- Configuration is not protected by any synchronization mechanism. It is not intended to change after initialization, so reading it requires no synchronization.

- struct m0_net_test_stats is not protected by any synchronization mechanism.

- struct m0_net_test_ctx is not protected by any synchronization mechanism.

NUMA optimizations

- Configuration is not intended to change after initialization, so it causes no cache coherence overhead.

- One m0_net_test_stats per locality can be used. Summary statistics can be collected from all localities using m0_net_test_stats_add_stats() only when needed.

- One m0_net_test_ctx per locality can be used.
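
The per-locality statistics idea can be sketched with a small accumulator that is updated in O(1) and merged on demand. This is an assumption-laden illustration: the structure layout and function names are hypothetical and do not reproduce m0_net_test_stats or m0_net_test_stats_add_stats(). The sketch returns the sample variance, whose square root is the standard deviation, to avoid depending on the math library.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical accumulator: enough data for min/max/average/variance. */
struct sample_stats {
	unsigned long ss_count;
	double        ss_min;
	double        ss_max;
	double        ss_sum;
	double        ss_sum_sqr;
};

/* Adds one value to the sample in O(1). */
void sample_stats_add(struct sample_stats *s, double v)
{
	assert(s != NULL);
	if (s->ss_count == 0 || v < s->ss_min)
		s->ss_min = v;
	if (s->ss_count == 0 || v > s->ss_max)
		s->ss_max = v;
	++s->ss_count;
	s->ss_sum     += v;
	s->ss_sum_sqr += v * v;
}

/* Merges src (e.g. one locality) into dst (the summary) in O(1). */
void sample_stats_merge(struct sample_stats *dst,
			const struct sample_stats *src)
{
	assert(dst != NULL && src != NULL);
	if (src->ss_count == 0)
		return;
	if (dst->ss_count == 0 || src->ss_min < dst->ss_min)
		dst->ss_min = src->ss_min;
	if (dst->ss_count == 0 || src->ss_max > dst->ss_max)
		dst->ss_max = src->ss_max;
	dst->ss_count   += src->ss_count;
	dst->ss_sum     += src->ss_sum;
	dst->ss_sum_sqr += src->ss_sum_sqr;
}

/* Average of the sample, or 0 for an empty sample. */
double sample_stats_avg(const struct sample_stats *s)
{
	assert(s != NULL);
	return s->ss_count == 0 ? 0.0 : s->ss_sum / (double)s->ss_count;
}

/* Sample variance, or 0 when fewer than two values were added. */
double sample_stats_variance(const struct sample_stats *s)
{
	double n;

	assert(s != NULL);
	if (s->ss_count < 2)
		return 0.0;
	n = (double)s->ss_count;
	return (s->ss_sum_sqr - s->ss_sum * s->ss_sum / n) / (n - 1.0);
}
```

Each locality updates only its own accumulator, so no cache line is shared on the hot path; the merge runs only when a summary is requested.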

Conformance

- I.m0.net.self-test.statistics user-space LNet implementation is used to collect statistics from all nodes.

- I.m0.net.self-test.statistics.live user-space LNet implementation is used to collect statistics from all nodes at some interval.

- I.m0.net.self-test.test.ping latency is automatically measured for all messages.

- I.m0.net.self-test.test.bulk messages with additional data are used.

- I.m0.net.self-test.test.bulk.integrity.no-check the additional data in bulk messages isn't checked.

- I.m0.net.self-test.test.duration.simple the end user is able to specify how long a test should run, by loop - see Test console command line parameters.

- I.m0.net.self-test.kernel test client/server is implemented as a kernel module.

Unit Tests

- Test:

- Ping message send/recv over loopback device.

- Concurrent ping messages send/recv over loopback device.

- Bulk active send/passive receive over loopback device.

- Bulk passive send/active receive over loopback device.

- Statistics for sample with one value.

- Statistics for sample with ten values.

- Merge two m0_net_test_stats structures with m0_net_test_stats_add_stats().

- Script for tool ping/bulk testing with two test nodes.

System Tests

- Test:

- Script for network benchmark ping/bulk self-testing over loopback device on single node.

Analysis

- all m0_net_test_stats_* functions have O(1) complexity;

- one mutex lock/unlock per statistics update in test client/server/console;

- one semaphore up/down per test message in test client;

- See also

- Motr Network Benchmark HLD

Current Limitations

- the test buffer for commands between the test console and a test node currently has a size of 16 KiB (see M0_NET_TEST_CMD_SIZE_MAX);

- m0_net_test_cmd_ctx.ntcc_sem_send, m0_net_test_cmd_ctx.ntcc_sem_recv and node_ping_ctx.npc_buf_q_sem can exceed SEMVMX (see 'man 3 sem_post', 'man 2 semop') if a large number of network buffers is used in the corresponding structures.

Known Issues

- The test console returns a different number of transfers for test clients and test servers if the time limit is reached. This is because a test node can't answer a STATUS command after a STOP command. Possible solution: split the STOP command into 2 different commands - "stop sending test messages" and "finalize test messages transfer machine" (currently the STOP command handler performs both actions) - so that the STATUS command can be sent after the "stop sending test messages" command.

- Bulk test worker thread (node_bulk_worker()): the test server may have all its work done in a single m0_net_buffer_event_deliver_all(), especially if the number of concurrent buffers is high. In this case the STATUS command gives inconsistent results for test clients and test servers. Workaround: use stats from the test client in the bulk test.

References

- Motr Network Benchmark HLD: for documentation links, refer to doc/motr-design-doc-list.rst

- LNET Self-Test manual

- DLD review request