- Overview

- Definitions

- Requirements

- Dependencies

- Design Highlights

- Functional Specification

- Conformance

- Unit Tests

- System Tests

- Analysis

- References

Overview

The circular queue provides a data structure and interfaces to manage a lock-free queue for a single producer and consumer. The producer and consumer can be in different address spaces with the queue in shared memory. The circular queue is designed to specifically meet the needs of the Core API. In particular see the The Buffer Event Queue section.

The queue implementation does not address how the consumer gets notified that queue elements have been produced. That functionality is provided separately by the Core API nlx_core_buf_event_wait() subroutine.

Definitions

- HLD of Motr LNet Transport : For documentation links, please refer to this file : doc/motr-design-doc-list.rst

Requirements

- r.m0.lib.atomic.interoperable-kernel-user-support The implementation shall provide a queue that supports atomic, interoperable sharing between kernel to user-space.

- r.net.xprt.lnet.growable-event-queue The implementation shall support an event queue to which new elements can be added over time.

Dependencies

- The Atomic API.

Design Highlights

- A data structure representing a circular queue.

- The circular queue efficiently delivers event notifications from the LNet Transport Kernel Core layer to the LNet transport layer.

- Handles atomic access to elements in the queue for a single producer and consumer.

- Handles dynamically adding new elements to the circular queue.

Logical Specification

- Component Overview

- Logic of the Circular Queue

- Cross Address Space Linkage Support

- Circular Queue Allocation

- State Specification

- Threading and Concurrency Model

- NUMA optimizations

Component Overview

The circular queue is a single component.

Logic of the Circular Queue

The circular queue is a FIFO queue. The implementation maintains pointers for the consumer and producer, a count of the number of elements that can currently be consumed, The total number of elements in the queue (both those that are consumable and those that are not currently consumable) and operations for accessing the pointers and for moving them around the circular queue elements. The application manages the memory containing the queue itself, and adds new elements to the queue when the size of the queue needs to grow. In this discussion of the logic, the pointers are named consumer, producer and next for brevity.

The elements starting after consumer up to but not including producer contain data to be consumed (those elements marked with "x" in the diagram). So, consumer follows producer around the circular queue. When consumer->next is the same as producer, the queue is empty (requiring that the queue be initialized with at least 2 elements). The element pointed to by consumer (element "y" in the diagram) is the element most recently consumed by the consumer. The producer cannot use this element, because if it did, producing that element would result in moving producer so that it would pass consumer.

In the context of the LNet Buffer Event Queue, the transport should add enough elements to the queue strictly before it enqueues buffer operations requiring subsequent completion notifications. The number required is the total number of possible events generated by queued buffers, plus one extra element for the most recently consumed event notification. The circular queue does not enforce this requirement, but does provide APIs that the transport can use to determine the current number of elements in the queue and to add new elements.

The element denoted by producer is returned by bev_cqueue_pnext() as long as the queue is not full. This allows the producer to determine the next available element and populate it with the data to be produced. Once the element contains the data, the producer then calls bev_cqueue_put() to make that element available to the consumer. This call also moves the producer pointer to the next element and increments the count of consumable elements.

The consumer uses bev_cqueue_get() to get the next available element containing data in FIFO order. Consuming an element causes consumer to be pointed at the next element in the queue and decrementing the count of consumable elements. After this call returns, the consumer "owns" the element returned, element "y" in the diagram. The consumer owns this element until it calls bev_cqueue_get() again, at which time ownership reverts to the queue and can be reused by the producer.

Cross Address Space Linkage Support

The pointers themselves are more complex than the description above suggests. The consumer pointer refers to the element just consumed in the consumer's (the transport) address space. The producer pointer refers to the element in the producer's (the kernel) address space.

A queue link element (the next pointer in the preceding discussion) is represented by the nlx_core_bev_link data structure:

The data structure maintains separate "opaque" pointer fields for the producer and consumer address spaces. Elements in the queue are linked through both flavors of their next field. The initialization of this data structure is described in Circular Queue Allocation. The opaque pointer type is derived from ::uint64_t. In the case of the producer, the nlx_core_kmem_loc structure is used instead of a pointer. This allows the buffer event object itself to be mapped and unmapped temporarily, rather than requiring all buffer events to be mapped in the kernel at all times (since this could exhaust the kernel page map table in the case of a user space consumer).

When the producer performs a bev_cqueue_put() call, internally, this call uses nlx_core_bev_link::cbl_p_next_loc to refer to the next element (and increment the count of consumable elements). Similarly, when the consumer performs a bev_cqueue_get() call, internally this subroutine uses nlx_core_bev_link::cbl_c_next (and decrements the count of consumable elements). Note that only allocation, discussed below, modifies any of these pointers. Steady-state operations on the queue only modify the consumer and producer pointers.

Because the producer "pointer" is implemented as a nlx_core_kmem_loc, it cannot be accessed atomically. So, a comparison like

cannot be implemented in general, without synchronization. However, by keeping an atomic count of consumable elements, subroutines such as bev_cqueue_is_empty() can be implemented by testing the count rather than comparing pointers.

Circular Queue Allocation

The circular queue must contain at least 2 elements, as discussed above. Additional elements can be added to maintain the requirement that the number of elements in the queue equals or exceeds the number of pending buffer operations, plus one element for the most recently consumed operation.

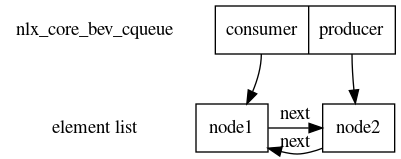

The initial condition is shown below. In this diagram, the queue is empty (see the state discussion, below). There is room in the queue for one pending buffer event and one completed/consumed event.

Before adding additional elements, the following are true:

- The number of elements in the queue, N, equals the number of pending operations plus one for the most recently consumed operation completion event.

- The producer produces one event per pending operation.

- The producer will never catch up with the consumer. Given the required number of elements, the producer will run out of work to do when it has generated one event for each buffer operation, resulting in a state where

producer == consumer.

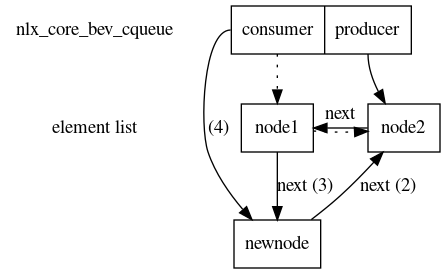

This means the queue can safely be expanded at the location of the consumer pointer (i.e. in the consumer address space), without affecting the producer. Elements are added as follows:

- Allocate and initialize a new queue element (referred to as

newnode) which setsnewnode->c_selfandnewnode->p_self. - Set

newnode->next = consumer->next - Set

consumer->next = newnode - set

consumer = newnode

Steps 2-4 are performed in bev_cqueue_add(). Because several fields need to be updated, simple atomic operations are insufficient. Thus, the transport layer must synchronize calls to bev_cqueue_add() and bev_cqueue_get(), because both calls affect the consumer. Given that bev_cqueue_add() completes its three operations before returning, and bev_cqueue_add() is called before the new buffer is added to the queue, there is no way the producer will try to generate an event and move its pointer forward until bev_cqueue_add() completes. This allows the transport layer and core layer to continue interact only using atomic operations.

A diagrammatic view of these steps is shown below. The dotted arrows signify the pointers before the new node is added. The Step numbers correspond to steps 2-4 above.

Once again, updating the next pointer is less straight forward than the diagram suggests. In step 1, the node is allocated by the transport layer. Once allocated, initialization includes the transport layer setting the nlx_core_bev_link::cbl_c_self pointer to point at the node and having the kernel core layer "bless" the node by setting the nlx_core_bev_link::cbl_p_self_loc field. After the self pointers are set, the next fields can be set by using these self fields. Since allocation occurs in the transport address space, the allocation logic uses the nlx_core_bev_link::cbl_c_next pointers of the existing nodes for navigation, and sets both the nlx_core_bev_link::cbl_c_next and nlx_core_bev_link::cbl_p_next_loc fields. The cbl_p_next_loc field is set by using the cbl_c_next->cbl_p_self_loc value, which is treated opaquely by the transport layer. So, steps 2 and 3 update both pairs of pointers. Allocation has no affect on the producer reference itself, only the consumer pointer.

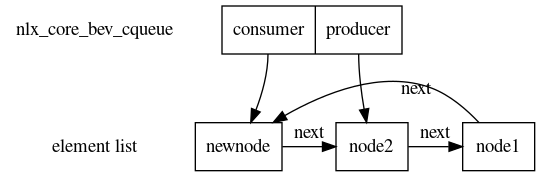

The resultant 3 element queue looks like this:

State Specification

The circular queue can be in one of 3 states:

- empty: This is the initial state and the queue returns to this state whenever the count of consumable elements return to zero.

- full: The queue contains elements and has no room for more. In this state, the producer should not attempt to put any more elements into the queue. Recall that the consumer "owns" the element that it just consumed, so the queue is full when the count of consumable elements is one less than the size of the queue. This state can be expressed as count == (total_number - 1)

- partial: In this state, the queue contains elements to be consumed and still has room for additional element production. This can be expressed as

Recall that the count is stored as a m0_atomic64, so it must be access using m0_atomic64_get(), requiring the use of a temporary variable in the case of testing if the queue is in the partial state.

Threading and Concurrency Model

A single producer and consumer are supported. The variables consumer and producer represent the range of elements in the queue containing data. While the producer is a compound object, with a single producer, no locking is required to access it. The producer cannot safely be accessed by the consumer, since they can be in different address spaces, so an atomic count of consumable elements is used as a surrogate for comparing the consumer and producer. Multiple producers and/or consumers must synchronize externally.

The transport layer acts both as the consumer and the allocator, and both operations use and modify the consumer variable and related pointers. As such, calls to bev_cqueue_add() and bev_cqueue_get() must be synchronized. The transport layer holds the transfer machine m0_net_transfer_mc::ntm_mutex when it calls bev_cqueue_add(). The transport layer will also hold this mutex when it calls bev_cqueue_get().

NUMA optimizations

None.

Conformance

- i.m0.lib.atomic.interoperable-kernel-user-support The nlx_core_bev_link data structure allows for tracking the pointers to the link in both address spaces. The atomic operations allow the FIFO to be produced and consumed simultaneously in both spaces without synchronization or context switches.

- i.net.xprt.lnet.growable-event-queue The implementation supports an event queue to which new elements can be added over time.

Unit Tests

The following cases will be tested by unit tests:

- Test:

- Initializing a queue of minimum size 2

- Test:

- Successfully producing an element

- Test:

- Successfully consuming an element

- Test:

- Failing to consume an element because the queue is empty

- Test:

- Initializing a queue of larger size

- Test:

- Repeating the producing and consuming tests

- Test:

- Concurrently producing and consuming elements

System Tests

System testing will include tests where the producer and consumer are in separate address spaces.

Analysis

The circular queue (the struct nlx_core_bev_cqueue) consumes fixed size memory, independent of the size of the elements contains the queue's data. The number of elements can grow over time, where the number of elements is proportional to the number of current and outstanding buffer operations. This number of elements will reach some maximum based on the peak activity in the application layer. Operations on the queue are O(1) complexity.

References

- HLD of Motr LNet Transport : For documentation links, please refer to this file : doc/motr-design-doc-list.rst

- Writing Lock-Free Code: A Corrected Queue, Herb Sutter, in Dr Dobbs Journal, 2008